Problem Description

System environment:

Hardware Environment (Ascend/GPU/CPU): Ascend
Software Environment:
-- MindSpore version (source or binary): 1.6.0
-- Python version (e.g., Python 3.7.5): 3.7.6
-- OS platform and distribution (e.g., Linux Ubuntu 16.04): Ubuntu 4.15.0-74-generic
-- GCC/Compiler version (if compiled from source):

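The training script builds a single-operator network around SoftmaxCrossEntropyWithLogits and computes the softmax cross-entropy of two variables. The script is as follows: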

import numpy as np
import mindspore
import mindspore.nn as nn
from mindspore import Tensor


class Net(nn.Cell):
    def __init__(self):
        super(Net, self).__init__()
        self.loss = nn.SoftmaxCrossEntropyWithLogits(sparse=False)

    def construct(self, logits, labels):
        output = self.loss(logits, labels)
        return output


net = Net()
# logits and labels are 3-D here, with shape (1, 2, 5)
logits = Tensor(np.array([[[2, 4, 1, 4, 5], [2, 1, 2, 4, 3]]]), mindspore.float32)
labels = Tensor(np.array([[[0, 0, 0, 0, 1], [0, 0, 0, 1, 0]]]).astype(np.float32))
out = net(logits, labels)
print('out', out)

Error message:

Traceback (most recent call last):
  File 'demo.py', line 13, in <module>
    out = net(logits, labels)
…
ValueError: mindspore/core/utils/check_convert_utils.cc:395 CheckInteger] For primitive[SoftmaxCrossEntropyWithLogits], the dimension of logits must be equal to 2, but got 3.
The function call stack (See file ' rank_0/om/analyze_fail.dat' for more details):
# 0 In file demo.py(04)
    output = self.loss(logits, labels)

Cause Analysis

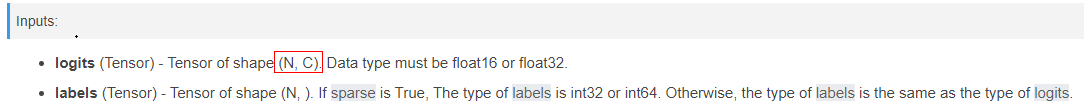

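The ValueError message, "For primitive[SoftmaxCrossEntropyWithLogits], the dimension of logits must be equal to 2, but got 3", means that logits must be 2-dimensional, whereas the logits actually passed in has a 3-dimensional shape. The official documentation describes the supported shape of logits as (N, C), as shown in the figure.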
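To catch this mismatch before the operator raises, the rank of the input can be checked up front. The following is a minimal sketch in plain Python; `require_2d_logits` is a hypothetical helper name, not part of MindSpore, and it only inspects a shape tuple such as the one returned by `logits.shape`.

```python
def require_2d_logits(shape):
    """Return the shape unchanged if it is (N, C); otherwise raise ValueError.

    Hypothetical helper for illustration only; not a MindSpore API.
    """
    shape = tuple(shape)
    if len(shape) != 2:
        raise ValueError(
            f"logits must be 2-D with shape (N, C), but got {len(shape)}-D shape {shape}"
        )
    return shape


# The shape from the failing script has three dimensions and is rejected.
try:
    require_2d_logits((1, 2, 5))
except ValueError as err:
    print(err)

# A 2-D shape passes through unchanged.
print(require_2d_logits((2, 5)))
```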

For 3-D data, first reshape it to 2-D, (N*L, C), then call nn.SoftmaxCrossEntropyWithLogits, and afterwards reshape the result back, to (N, 1) in this example where L = 1.
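The flatten, compute, regroup pattern described above can be sketched without MindSpore. The code below is a plain-Python illustration on nested lists, not the operator's implementation; the function names `softmax_cross_entropy` and `loss_for_3d` are made up for this example, and it assumes one loss value per row (no reduction).

```python
import math


def softmax_cross_entropy(logits_row, labels_row):
    """Softmax cross-entropy of one (C,) logits row against one-hot or soft labels."""
    # Subtract the row maximum before exponentiating for numerical stability.
    m = max(logits_row)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits_row))
    # loss = -sum_j labels_j * log_softmax(logits)_j
    return -sum(y * (x - log_sum_exp) for x, y in zip(logits_row, labels_row))


def loss_for_3d(logits, labels):
    """Flatten (L, N, C) inputs to (L*N, C), compute per-row loss, regroup to (L, N)."""
    num_outer, num_inner = len(logits), len(logits[0])
    # Flatten the two leading dimensions into one list of rows.
    flat_logits = [row for block in logits for row in block]
    flat_labels = [row for block in labels for row in block]
    flat_loss = [softmax_cross_entropy(x, y) for x, y in zip(flat_logits, flat_labels)]
    # Regroup the L*N losses so that they line up with the leading dimensions.
    return [flat_loss[i * num_inner:(i + 1) * num_inner] for i in range(num_outer)]


logits = [[[2, 4, 1, 4, 5], [2, 1, 2, 4, 3]]]
labels = [[[0, 0, 0, 0, 1], [0, 0, 0, 1, 0]]]
print(loss_for_3d(logits, labels))
```

Regrouping to (L, N) rather than a fixed (N, 1) keeps the sketch valid when the leading dimension is larger than 1; for the inputs in this post the two losses come out at roughly 0.5899 and 0.5237.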

Reference: SoftmaxCrossEntropyWithLogits operator API documentation

Solution

Based on the cause above, modify the training script:

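import numpy as np
import mindspore
import mindspore.nn as nn
from mindspore import Tensor


class Net(nn.Cell):
    def __init__(self):
        super(Net, self).__init__()
        self.loss = nn.SoftmaxCrossEntropyWithLogits(sparse=False)

    def construct(self, logits, labels):
        output = self.loss(logits, labels)
        return output


net = Net()
logits = Tensor(np.array([[[2, 4, 1, 4, 5], [2, 1, 2, 4, 3]]]), mindspore.float32)
labels = Tensor(np.array([[[0, 0, 0, 0, 1], [0, 0, 0, 1, 0]]]).astype(np.float32))
# Flatten the two leading dimensions so that both inputs become 2-D: (L*N, C)
L, N, C = logits.shape
logits, labels = logits.reshape(L * N, C), labels.reshape(L * N, C)
out = net(logits, labels)
# The loss has one value per row; with L == 1 it can be reshaped to (N, 1)
out = out.reshape(N, 1)
print('out', out)

Run the training. On success, the output is as follows: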

out [[0.5899297 ]
 [0.52374405]]
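These values can be checked by hand. With one-hot labels, the loss of each row reduces to the negative log-softmax at the labelled class. The working below assumes no reduction is applied, which is consistent with the output containing one value per row.

```latex
\begin{aligned}
\ell_1 &= -\ln\frac{e^{5}}{e^{2}+e^{4}+e^{1}+e^{4}+e^{5}} = \ln(267.7168) - 5 \approx 0.5899,\\
\ell_2 &= -\ln\frac{e^{4}}{e^{2}+e^{1}+e^{2}+e^{4}+e^{3}} = \ln(92.1801) - 4 \approx 0.5237.
\end{aligned}
```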