Challenges

High Computing Costs

With over 10 million AI accelerator cards expected, compute capacity keeps expanding to meet growing training needs and surging inference demand.

Rapid Application Innovation

AI is driving rapid innovation in Internet foundation models and applications, with frequent releases in mainstream consumer-facing (ToC) scenarios, some of which are already commercialized.

High Performance Required

Internet services, including intelligent assistants, search, and multimodal applications, depend on low-latency, high-throughput AI platforms.

Solutions

LLM

Fast, easy, and accurate training and inference

Multimodal

High performance in understanding and generation scenarios

SRA

High availability, strong generalization, and ease of use

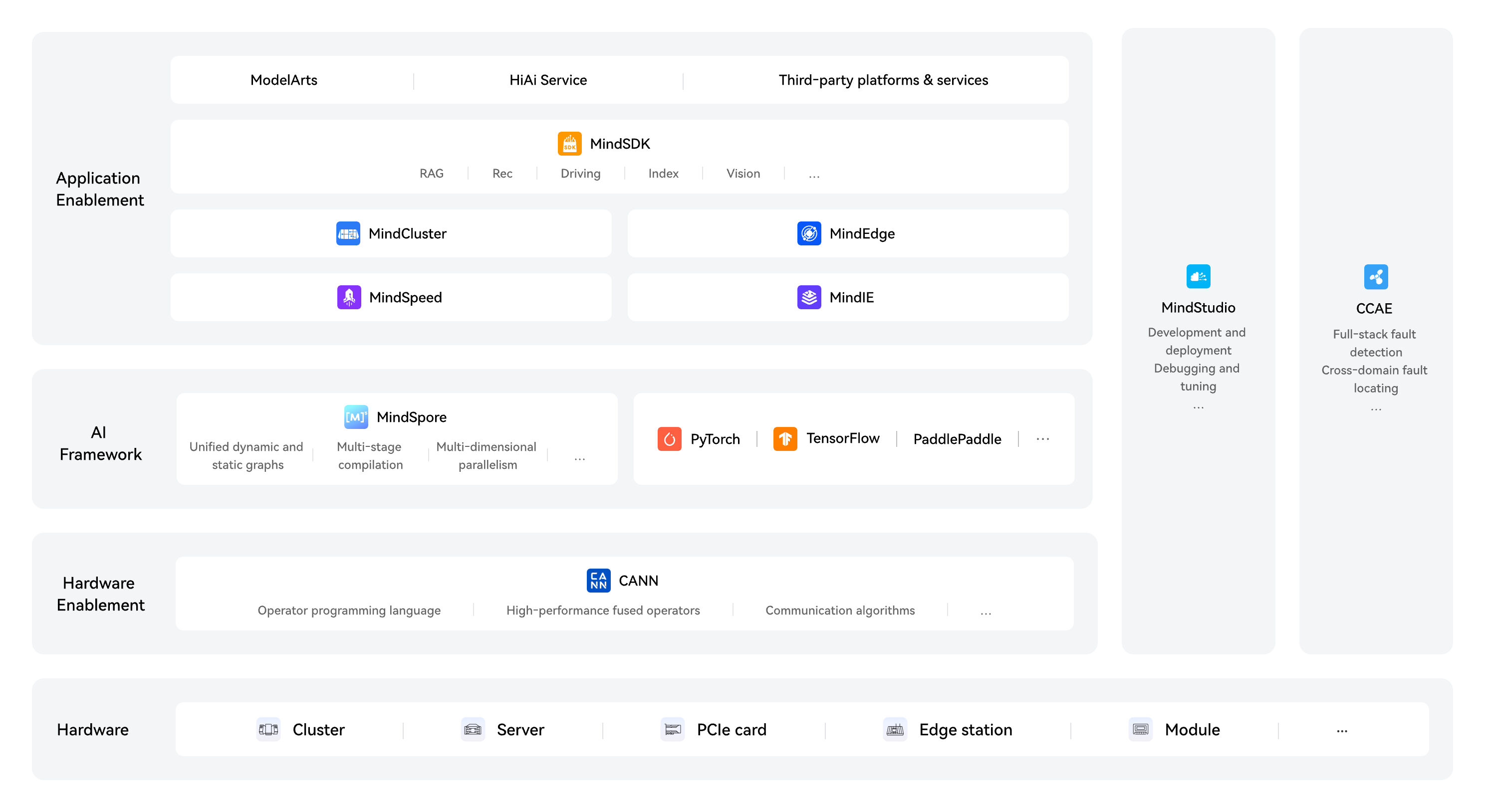

Application Scenarios

Intelligent Assistant

Supports inference on 100B-parameter models, serving millions of active users and hundreds of millions of requests per day.

Supports high-performance and low-latency dialog interactions.

SRA

Improves generalization to boost the performance of typical recommendation models.

Speeds up innovation in generative recommendation for the Internet industry.

Smart Office

Delivers a fast, user-friendly inference solution for document typesetting, copywriting, slide creation, and formula layout.

Text-to-Image Generation

Generates images in seconds, streamlining poster design and avatar creation.

Delivers strong performance for fast text generation.

Lowers staffing costs for design and customer support teams.