DoubleBuffer场景

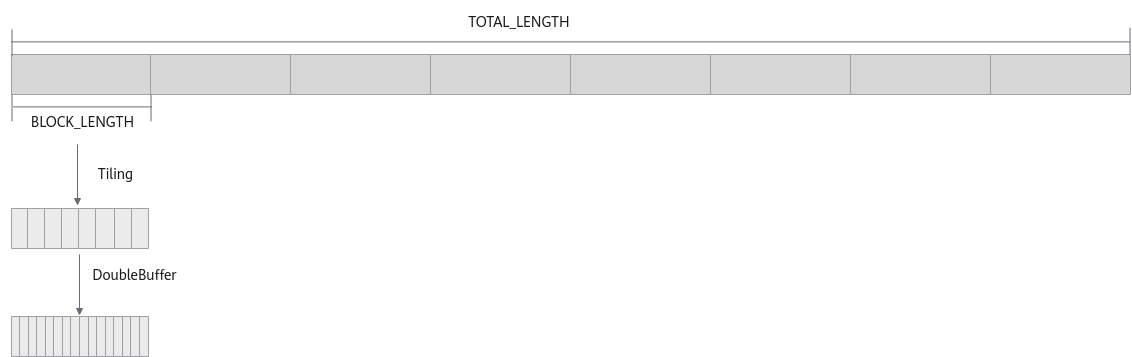

因存在算子中多次搬入搬出数据的场景,为充分利用硬件资源,实现多流水并行,引入DoubleBuffer机制。DoubleBuffer是通过将输入数据分成大小相等的两块,充分利用AI Core的硬件资源,实现数据搬入、计算、数据搬出的并行执行方式。下面以“核间不均分,核内不均分”的样例为例,介绍算子中DoubleBuffer的实现,完整样例代码请参见使用DoubleBuffer的Add算子样例。

Tiling实现

使能DoubleBuffer后,每一个数据块会分成大小相等的两块,因此,若要使能DoubleBuffer,要求数据总量应该能够均分。为了简化处理,将可用的Unified Buffer空间以32字节为粒度,分成n块dataBlock,如果n不是偶数,则减1,这样就可以保证一套代码兼容开启或不开启DoubleBuffer功能。对应步骤如下:

- 判断数据总长度totalLength是否满足32字节对齐,如不满足,则计算totalLength向上32字节对齐后的长度totalLengthAligned。

1 2 3 4 5 6

constexpr uint32_t BLOCK_SIZE = 32; // 为方便计算,这里根据数据类型定义变量alignNum作为对齐数 uint32_t alignNum = BLOCK_SIZE / dataTypeSize; // totalLength为数据总量 uint32_t totalLengthAligned = (totalLength % alignNum == 0)? totalLength : ((totalLength + alignNum - 1) / alignNum) * alignNum;

- 根据totalLengthAligned,计算每个核的计算数据长度blockLength,分核策略可参照尾核Tiling。

- 计算其余Tiling参数。对当前Unified Buffer可用空间以32字节为粒度,进行切分,计算出数据块个数UB_BLOCK_NUM。根据是否开启DoubleBuffer计算出当前可用的最大数据块个数,记作MAX_AVAILABLE_UB_BLOCK_NUM。最后,以MAX_AVAILABLE_UB_BLOCK_NUM为粒度,对blockLength进行切分。为方便演示,如下代码直接给出UB_BLOCK_NUM,作为当前Unified Buffer可用空间包含的block(32字节)数。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21

constexpr uint32_t BUFFER_NUM = 2; constexpr uint32_t UB_BLOCK_NUM = 21; // UB最大可以使用的block数量 constexpr uint32_t MAX_AVAILABLE_UB_BLOCK_NUM = UB_BLOCK_NUM / BUFFER_NUM * BUFFER_NUM; tileNum = blockLength / (alignNum * MAX_AVAILABLE_UB_BLOCK_NUM); if ((blockLength / alignNum) % MAX_AVAILABLE_UB_BLOCK_NUM == 0 || tileNum == 0) { if (tileNum == 0) { tileNum = 1; } if (blockLength < MAX_AVAILABLE_UB_BLOCK_NUM * alignNum) { tileLength = ((blockLength / alignNum) + 1) / BUFFER_NUM * BUFFER_NUM * alignNum; lastTileLength = tileLength; } else { tileLength = MAX_AVAILABLE_UB_BLOCK_NUM * alignNum; lastTileLength = (blockLength - (tileNum - 1) * tileLength); } } else { tileNum = tileNum + 1; tileLength = MAX_AVAILABLE_UB_BLOCK_NUM * alignNum; lastTileLength = (blockLength - (tileNum - 1) * tileLength); }

算子类实现

不开启DoubleBuffer时,只需要对每个核上最后一个分块的起始地址做处理;开启DoubleBuffer后,需要处理的数据块长度变成原来的一半,所以需要对最后两个数据块的起始地址做处理。

开启DoubleBuffer,参考InitBuffer接口函数原型,将num参数配置成2。

1 2 3 |

pipe.InitBuffer(inQueueX, BUFFER_NUM, this->tileLength * sizeof(dataType)); pipe.InitBuffer(inQueueY, BUFFER_NUM, this->tileLength * sizeof(dataType)); pipe.InitBuffer(outQueueZ, BUFFER_NUM, this->tileLength * sizeof(dataType)); |

同时在计算核内每个数据块的长度时,考虑DoubleBuffer场景,需要将Buffer数量,即BUFFER_NUM=2带入计算。

1

|

this->tileLength = tiling.tileLength / BUFFER_NUM; |

由于无法保证尾块满足DoubleBuffer的条件,因此不对尾块进行切分。

1

|

this->lastTileLength = tiling.lastTileLength; |

Init函数实现代码如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 |

__aicore__ inline void Init(GM_ADDR x, GM_ADDR y, GM_ADDR z, AddCustomTilingData tiling) { if (tiling.isEvenCore) { this->blockLength = tiling.blockLength; this->tileNum = tiling.tileNum; this->tileLength = tiling.tileLength / BUFFER_NUM; this->lastTileLength = tiling.lastTileLength; xGm.SetGlobalBuffer((__gm__ dataType *)x + this->blockLength * AscendC::GetBlockIdx(), this->blockLength); yGm.SetGlobalBuffer((__gm__ dataType *)y + this->blockLength * AscendC::GetBlockIdx(), this->blockLength); zGm.SetGlobalBuffer((__gm__ dataType *)z + this->blockLength * AscendC::GetBlockIdx(), this->blockLength); } else { if (AscendC::GetBlockIdx() < tiling.formerNum) { this->tileNum = tiling.formerTileNum; this->tileLength = tiling.formerTileLength / BUFFER_NUM; this->lastTileLength = tiling.formerLastTileLength; xGm.SetGlobalBuffer((__gm__ dataType *)x + tiling.formerLength * AscendC::GetBlockIdx(), tiling.formerLength); yGm.SetGlobalBuffer((__gm__ dataType *)y + tiling.formerLength * AscendC::GetBlockIdx(), tiling.formerLength); zGm.SetGlobalBuffer((__gm__ dataType *)z + tiling.formerLength * AscendC::GetBlockIdx(), tiling.formerLength); } else { this->tileNum = tiling.tailTileNum; this->tileLength = tiling.tailTileLength / BUFFER_NUM; this->lastTileLength = tiling.tailLastTileLength; xGm.SetGlobalBuffer((__gm__ dataType *)x + tiling.formerLength * tiling.formerNum + tiling.tailLength * (AscendC::GetBlockIdx() - tiling.formerNum), tiling.tailLength); yGm.SetGlobalBuffer((__gm__ dataType *)y + tiling.formerLength * tiling.formerNum + tiling.tailLength * (AscendC::GetBlockIdx() - tiling.formerNum), tiling.tailLength); zGm.SetGlobalBuffer((__gm__ dataType *)z + tiling.formerLength * tiling.formerNum + tiling.tailLength * (AscendC::GetBlockIdx() - tiling.formerNum), tiling.tailLength); } } pipe.InitBuffer(inQueueX, BUFFER_NUM, this->tileLength * sizeof(dataType)); pipe.InitBuffer(inQueueY, BUFFER_NUM, this->tileLength * sizeof(dataType)); pipe.InitBuffer(outQueueZ, BUFFER_NUM, this->tileLength * sizeof(dataType)); } |

由于开启DoubleBuffer后,切分后的数据块个数翻倍,在Process函数中,需要将BUFFER_NUM带入计算。

1 2 3 4 5 6 7 8 9 10 |

__aicore__ inline void Process() { // loop count need to be doubled, due to DoubleBuffer constexpr int32_t loopCount = TILE_NUM * BUFFER_NUM; for (int32_t i = 0; i < loopCount; i++) { CopyIn(i); Compute(i); CopyOut(i); } } |

CopyIn函数、CopyOut函数需要对尾块进行单独处理。对于最后两个数据块,先向前偏移tileLength +lastTileLength索引,再使用tileLength作为单次计算量(仅作为参考,可能并非最佳)。

CopyIn函数实现代码如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

__aicore__ inline void CopyIn(int32_t progress) { AscendC::LocalTensor<dataType> xLocal = inQueueX.AllocTensor<dataType>(); AscendC::LocalTensor<dataType> yLocal = inQueueY.AllocTensor<dataType>(); if ((progress == (this->tileNum * BUFFER_NUM - 2)) || (progress == (this->tileNum * BUFFER_NUM - 1))) { AscendC::DataCopy(xLocal, xGm[(progress - 2) * this->tileLength + this->lastTileLength], this->tileLength); AscendC::DataCopy(yLocal, yGm[(progress - 2) * this->tileLength + this->lastTileLength], this->tileLength); } else { AscendC::DataCopy(xLocal, xGm[progress * this->tileLength], this->tileLength); AscendC::DataCopy(yLocal, yGm[progress * this->tileLength], this->tileLength); } inQueueX.EnQue(xLocal); inQueueY.EnQue(yLocal); } |

CopyOut函数实现代码如下:

1 2 3 4 5 6 7 8 9 10 11 |

__aicore__ inline void CopyOut(int32_t progress) { AscendC::LocalTensor<dataType> zLocal = outQueueZ.DeQue<dataType>(); if ((progress == (this->tileNum * BUFFER_NUM - 2)) || (progress == (this->tileNum * BUFFER_NUM - 1))) { AscendC::DataCopy(zGm[(progress - 2) * this->tileLength + this->lastTileLength], zLocal, this->tileLength); } else { AscendC::DataCopy(zGm[progress * this->tileLength], zLocal, this->tileLength); } outQueueZ.FreeTensor(zLocal); } |