Interface Call Flow

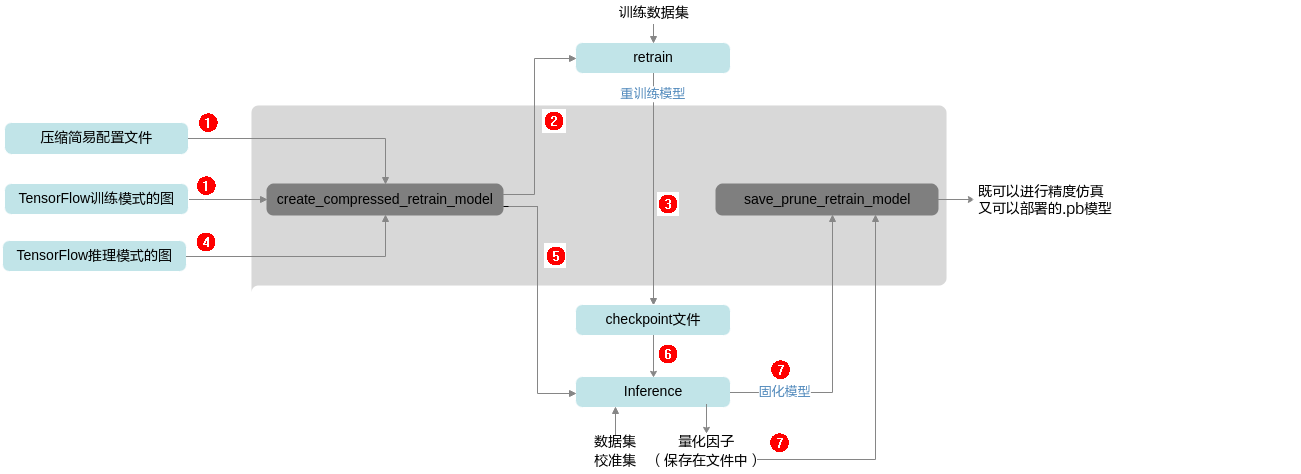

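In Figure 1, the blue parts are implemented by the user, and the gray parts are implemented by calling the APIs provided by the Ascend Model Compression Toolkit (AMCT). To enable compression, import the library into the TensorFlow script of your original network and call the corresponding APIs at the appropriate points: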

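- Call the graph modification interface create_compressed_retrain_model. Based on the simple configuration file and the user's original model, it modifies the training graph before compression by inserting channel-sparsity and quantization-aware-training operators. The result is the graph used for combined compression training (step 1 in Figure 1).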

- Build the backward (gradient) graph with the adaptive learning-rate optimizer RMSPropOptimizer. This step must run after step 1.

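```python
# Build the backward graph on the modified training graph (after step 1)
optimizer = tf.compat.v1.train.RMSPropOptimizer(
    ARGS.learning_rate, momentum=ARGS.momentum)
train_op = optimizer.minimize(loss)
```

- Create a session, train the model, and save the trained parameters as a checkpoint file (steps 2 and 3 in Figure 1).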

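```python
saver_save = tf.compat.v1.train.Saver()
with tf.compat.v1.Session() as sess:
    sess.run(tf.compat.v1.global_variables_initializer())
    # Run the training op (in practice, loop over batches of training data)
    sess.run(train_op)
    # Save the trained parameters as a checkpoint file
    saver_save.save(sess, retrain_ckpt, global_step=0)
```

- Build the inference-mode graph (with the BN is_training parameter set to False) and call the combined compression graph modification interface create_compressed_retrain_model again. Based on the simple configuration file, it modifies the inference graph before compression by inserting channel-sparsity and quantization-aware-training operators for later model freezing and accuracy inference. It also generates a record file that stores the sparsity information and quantization factors (steps 4 and 5 in Figure 1).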

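- Create a session, restore the trained parameters, and run the quantization output node (retrain_ops[-1]) to write the quantization factors to the record file. Then freeze the model into a .pb file (steps 6 and 7 in Figure 1).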

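```python
variables_to_restore = tf.compat.v1.global_variables()
saver_restore = tf.compat.v1.train.Saver(variables_to_restore)
with tf.compat.v1.Session() as sess:
    sess.run(tf.compat.v1.global_variables_initializer())
    # Restore the trained parameters; Saver.save(..., global_step=0)
    # wrote them under the prefix retrain_ckpt + '-0'
    saver_restore.restore(sess, retrain_ckpt + '-0')
    # Write the quantization factors to the record file.
    # Note: if no quantization feature is enabled, skip this step and go to the next one.
    sess.run(retrain_ops[-1])
    # Freeze into a .pb model; tensor.op.name is the node name without the ':0' suffix
    constant_graph = tf.compat.v1.graph_util.convert_variables_to_constants(
        sess, eval_graph.as_graph_def(), [output.op.name for output in outputs])
    with tf.io.gfile.GFile(frozen_quant_eval_pb, 'wb') as f:
        f.write(constant_graph.SerializeToString())
```

- Call the compressed-model save interface save_compressed_retrain_model. Using the record file of sparsity information and quantization factors together with the frozen model, it exports a model that can be used both for accuracy simulation in TensorFlow and for deployment on the Ascend AI Processor (step 7 in Figure 1).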
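To make the two kinds of inserted operators more concrete, the following framework-free sketch mimics what they simulate on a tiny weight tensor. Channel sparsity zeros out whole output channels. Quantization-aware training applies quantize-dequantize ("fake quantization"), and the scale it computes plays the role of a quantization factor. The sketch assumes L1-norm channel selection and symmetric per-tensor 8-bit quantization purely for illustration. AMCT's actual selection criteria, granularity, and record-file contents may differ, and all function names are hypothetical.

```python
# Generic illustration only; not AMCT's actual sparsity or quantization algorithm.

def channel_l1_norms(weights):
    """L1 norm of each output channel; weights[c] holds the flattened kernel of channel c."""
    return [sum(abs(w) for w in channel) for channel in weights]


def prune_channels(weights, sparsity_ratio):
    """Zero out the channels with the smallest L1 norm (illustrative channel sparsity).

    Returns the sparsified weights and a per-channel mask (1.0 = kept, 0.0 = pruned).
    """
    norms = channel_l1_norms(weights)
    num_pruned = int(len(weights) * sparsity_ratio)
    # Indices of the weakest channels, smallest L1 norm first
    pruned = set(sorted(range(len(weights)), key=lambda c: norms[c])[:num_pruned])
    mask = [0.0 if c in pruned else 1.0 for c in range(len(weights))]
    sparse = [[w * mask[c] for w in channel] for c, channel in enumerate(weights)]
    return sparse, mask


def fake_quantize(values, num_bits=8):
    """Symmetric per-tensor quantize-dequantize, the kind of op QAT inserts.

    Returns the dequantized values and the scale (an example of a quantization factor).
    """
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the integer grid and clamp to the symmetric range [-qmax, qmax]
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return [q * scale for q in quantized], scale


if __name__ == "__main__":
    # Toy kernel with 4 output channels of 2 weights each
    kernel = [[0.5, -0.2], [0.01, 0.02], [1.0, -0.8], [-0.05, 0.03]]
    sparse_kernel, mask = prune_channels(kernel, sparsity_ratio=0.5)
    print(mask)  # [1.0, 0.0, 1.0, 0.0]
    dequantized, scale = fake_quantize([w for ch in sparse_kernel for w in ch])
    print(scale, dequantized)
```

During training, the rounding error introduced by fake quantization is part of the forward pass, so the remaining weights learn to compensate for it. This is why the quantization factors are only final after training.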

Calling Example

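- Steps marked "to be completed by the user" in the following example must be adapted to your own model and dataset; the code shown is example code only.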

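- For the calls into AMCT, adjust the function arguments as needed. Quantization-aware training builds on your existing training process, so make sure you already have a training script that runs in TensorFlow and produces a model with normal accuracy.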

- Import the AMCT package and set the log level.

```python
import amct_tensorflow as amct

amct.set_logging_level(print_level="info", save_level="info")
```

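- (Optional, to be completed by the user) Build the graph, load the trained parameters, and run inference in TensorFlow to verify that the environment and the inference script work.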

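This step is recommended to make sure that the original model runs inference with normal accuracy. You can use a subset of the test set to shorten the run time.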

```python
user_test_evaluate_model(evaluate_model, test_data)
```
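The document leaves user_test_evaluate_model to the user. A minimal sketch of what such a helper might compute is top-1 accuracy over a reproducible random subset of the test set, which follows the advice to evaluate on only part of the data. The sketch assumes the model can be wrapped as a `predict(features) -> label` callable and that the test data is a list of `(features, label)` pairs. Both helper names are hypothetical.

```python
import random


def top1_accuracy(predict, samples):
    """Fraction of (features, label) pairs for which predict(features) == label."""
    if not samples:
        raise ValueError("no samples to evaluate")
    correct = sum(1 for features, label in samples if predict(features) == label)
    return correct / len(samples)


def evaluate_on_subset(predict, test_data, fraction=0.1, seed=0):
    """Evaluate on a random but reproducible subset of the test set to save time."""
    k = max(1, int(len(test_data) * fraction))
    subset = random.Random(seed).sample(test_data, k)
    return top1_accuracy(predict, subset)


if __name__ == "__main__":
    # Toy "model": predicts 1 when the single feature is positive
    data = [([x], int(x > 0)) for x in range(-50, 50)]
    print(evaluate_on_subset(lambda f: int(f[0] > 0), data, fraction=0.2))  # 1.0
```

A fixed seed keeps the subset identical across runs, so the accuracy of the original model and that of the compressed model can be compared on the same samples.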

- (To be completed by the user) Create the training graph.

```python
train_graph = user_load_train_graph()
```

- Call AMCT to run the combined compression flow.

- Modify the graph by inserting sparsity- and quantization-related operators.

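```python
# simple_cfg: path to the simple configuration file (prepared by the user)
# user_model_outputs: outputs of the user's model (provided by the user)
record_file = './tmp/record.txt'
retrain_ops = amct.create_compressed_retrain_model(
    graph=train_graph,
    config_defination=simple_cfg,
    outputs=user_model_outputs,
    record_file=record_file)
```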
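The training step that follows builds the backward graph with tf.compat.v1.train.RMSPropOptimizer, as in the interface flow. The framework-free sketch below shows what each step applies to a variable, element-wise. It assumes the non-centered RMSProp rule with momentum used by TF1's optimizer, with its default decay=0.9 and epsilon=1e-10. The function name rmsprop_step is hypothetical. The initial mean-square value is left to the caller because implementations differ on it.

```python
import math


def rmsprop_step(var, grad, ms, mom, lr, decay=0.9, momentum=0.0, epsilon=1e-10):
    """One non-centered RMSProp update with momentum, element-wise over lists.

    Returns the updated (var, ms, mom) lists.
    """
    new_var, new_ms, new_mom = [], [], []
    for v, g, s, m in zip(var, grad, ms, mom):
        s = decay * s + (1.0 - decay) * g * g                # running mean of squared gradients
        m = momentum * m + lr * g / math.sqrt(s + epsilon)   # momentum on the normalized step
        new_var.append(v - m)
        new_ms.append(s)
        new_mom.append(m)
    return new_var, new_ms, new_mom


if __name__ == "__main__":
    # Minimize f(x) = (x - 3)^2, whose gradient is 2 * (x - 3)
    x, ms, mom = [0.0], [0.0], [0.0]
    for _ in range(2000):
        x, ms, mom = rmsprop_step(x, [2.0 * (x[0] - 3.0)], ms, mom, lr=0.01)
    print(x[0])  # ends up within a few hundredths of 3.0
```

Dividing by the running root-mean-square makes the step size largely insensitive to the scale of the gradient, and the momentum term (ARGS.momentum in the flow) accumulates these normalized steps.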

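- (To be completed by the user) Use the modified graph to create the backward gradients, then train on the training set to learn the model parameters and quantization factors.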

- Save the model and remove the inserted sparsity operators.

```python
# trained_pb: the frozen .pb model produced after training (see the interface flow)
compressed_model_path = './result/user_model'
amct.save_compressed_retrain_model(
    pb_model=trained_pb,
    outputs=user_model_outputs,
    record_file=record_file,
    save_path=compressed_model_path)
```

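- (Optional, to be completed by the user) Run inference in TensorFlow with the combined-compressed model user_model_compressed.pb and the test set to measure the accuracy of the simulated compressed model.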

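Comparing this accuracy with the original accuracy measured in the optional verification step on the original model shows how combined compression affects accuracy.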

```python
compressed_model = './result/user_model_compressed.pb'
user_do_inference(compressed_model, test_data)
```
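To quantify this comparison, a small helper can report both the absolute drop (in percentage points) and the relative drop. The helper below is a hypothetical sketch. The accuracies in the usage lines are placeholder values, not measured results.

```python
def accuracy_drop(baseline_acc, compressed_acc):
    """Absolute drop (percentage points) and relative drop (%) of a compressed model.

    Both accuracies are fractions in [0, 1].
    """
    if not 0.0 < baseline_acc <= 1.0:
        raise ValueError("baseline accuracy must be in (0, 1]")
    absolute_pp = (baseline_acc - compressed_acc) * 100.0
    relative_pct = (baseline_acc - compressed_acc) / baseline_acc * 100.0
    return absolute_pp, relative_pct


if __name__ == "__main__":
    # Placeholder accuracies for illustration only
    abs_pp, rel_pct = accuracy_drop(0.760, 0.752)
    print(f"absolute drop: {abs_pp:.2f} pp, relative drop: {rel_pct:.2f}%")
    # absolute drop: 0.80 pp, relative drop: 1.05%
```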