A Hands-On Guide to the DeepSeek-R1 Quantized Model

Published 2025/02/25

Supported Models and Device Selection

| Model | Atlas 300V | Atlas 300I Duo | Atlas 800I A2 |
|---|---|---|---|
| DeepSeek V3 | - | - | √ |
| DeepSeek R1 | - | - | √ |
| DeepSeek Janus-Pro-1B/7B | √ | √ | √ |
| DeepSeek R1-Distill-Llama-70B | - | - | √ |
| DeepSeek R1-Distill-Llama-8B | √ | √ | √ |
| DeepSeek R1-Distill-Qwen-32B | - | - | √ |
| DeepSeek R1-Distill-Qwen-14B | √ | √ | √ |
| DeepSeek R1-Distill-Qwen-7B | √ | √ | √ |
| DeepSeek R1-Distill-Qwen-1.5B | √ | √ | √ |

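The rest of this post uses DeepSeek-R1 as the example and walks through obtaining the quantized model and deploying it.

Hardware Requirements

Deploying the quantized DeepSeek R1 model requires Atlas 800I A2 (2*8*64GB), that is, two servers with eight 64 GB cards each. This post focuses on DeepSeek-R1. DeepSeek-V3 has the same model architecture and parameter count as R1, so it is deployed in the same way.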
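As a rough sanity check on the 2*8*64GB figure, the sketch below compares total card memory with the approximate size of the weights alone. The 671B parameter count is the publicly stated total for DeepSeek-R1 and is an assumption here, not a number from this post. The estimate ignores KV cache, activations, and runtime overhead, and it treats every weight as INT8 even though a W8A8 checkpoint may keep some layers in higher precision.

```shell
# Weights-only memory estimate (a sketch, not a sizing tool).
params_b=671        # assumption: total parameters in billions (public figure)
nodes=2
cards_per_node=8
gb_per_card=64

total_gb=$(( nodes * cards_per_node * gb_per_card ))  # memory across all cards
bf16_gb=$(( params_b * 2 ))                           # 2 bytes per parameter
int8_gb=$(( params_b * 1 ))                           # 1 byte per parameter

echo "total card memory : ${total_gb} GB"
echo "BF16 weights      : ~${bf16_gb} GB"
echo "INT8 weights      : ~${int8_gb} GB"
```

Under these assumptions the INT8 weights leave headroom on two nodes, while the BF16 weights alone would exceed the combined card memory.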

Model Weights

1.1 Downloading the Weights

Download the weights from the open-source community.

| Modelers | https://modelers.cn/models/State_Cloud/DeepSeek-R1-W8A8 |
|---|---|

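1.2 Optional W8A8 Quantization (BF16 to INT8)

If you have already downloaded the BF16 model, you can quantize it with the following steps.

Step 1: Install ModelSlim

    git clone https://gitee.com/ascend/msit.git
    cd msit/msmodelslim
    bash install.sh

Step 2: Run the quantization command

    cd msit/msmodelslim/example/DeepSeek/
    python3 quant_deepseek_w8a8.py \
        --model_path {path to the floating-point weights} \
        --save_path {path for the W8A8 quantized weights}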

Loading the MindIE Image

| Image link | https://www.hiascend.com/developer/ascendhub/detail/af85b724a7e5469ebd7ea13c3439d48f |
|---|---|
| Image version | 2.0.T3-800I-A2-py311-openeuler24.03-lts |

Starting the Container

Container startup command:

    container_name=$1   # argument 1: container name
    image_id=$2         # argument 2: image ID
    model_path=$3       # argument 3: weights path
    docker run -itd --privileged --name="$container_name" --net=host --shm-size=500g \
        --device=/dev/davinci0 \
        --device=/dev/davinci1 \
        --device=/dev/davinci2 \
        --device=/dev/davinci3 \
        --device=/dev/davinci4 \
        --device=/dev/davinci5 \
        --device=/dev/davinci6 \
        --device=/dev/davinci7 \
        --device=/dev/davinci_manager \
        --device=/dev/devmm_svm \
        --device=/dev/hisi_hdc \
        -v /usr/local/Ascend/driver:/usr/local/Ascend/driver \
        -v /usr/local/Ascend/add-ons/:/usr/local/Ascend/add-ons/ \
        -v /usr/local/sbin/:/usr/local/sbin/ \
        -v /var/log/npu/slog/:/var/log/npu/slog \
        -v /var/log/npu/profiling/:/var/log/npu/profiling \
        -v /var/log/npu/dump/:/var/log/npu/dump \
        -v /var/log/npu/:/usr/slog \
        -v /etc/hccn.conf:/etc/hccn.conf \
        -v "$model_path":/model \
        "$image_id" /bin/bash
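The eight `--device=/dev/davinciN` flags in the command above follow a fixed pattern, one per card. A minimal sketch of generating them is shown below; `device_flags` is a hypothetical helper name, not part of Docker or the Ascend tooling.

```shell
# Sketch: print one --device flag per card, davinci0 .. davinci(N-1).
# device_flags is a hypothetical helper introduced for illustration.
device_flags() {
  n=$1
  i=0
  flags=""
  while [ "$i" -lt "$n" ]; do
    # Append the next flag, separated by a single space.
    flags="${flags:+$flags }--device=/dev/davinci$i"
    i=$(( i + 1 ))
  done
  printf '%s\n' "$flags"
}

device_flags 8
```

The output could be spliced into the startup command as an unquoted `$(device_flags 8)` so that the shell splits it into separate arguments. The remaining `--device` and `-v` options stay as written.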

Enter the container:

    docker exec -it {container name} /bin/bash

Pure Model Test

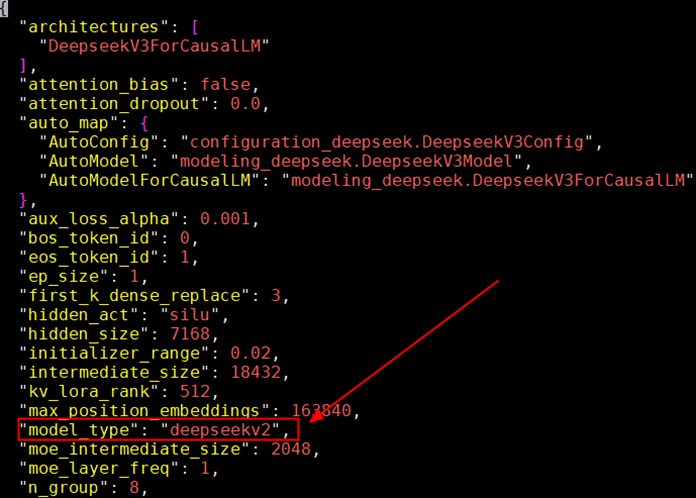

Modify the model files:

Configure ranktable.json:

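The `#` annotations and `...` entries in the template are explanatory only. JSON does not allow comments, so remove them from the real file.

    {
        "server_count": "...",   # total number of nodes
        # the first server in server_list is the master node
        "server_list": [
            {
                "device": [
                    {
                        "device_id": "...",   # index of this card on its node, range [0, cards on this node)
                        "device_ip": "...",   # IP address of this card, obtainable with the hccn_tool command
                        "rank_id": "..."      # global index of this card, range [0, total cards)
                    },
                    ...
                ],
                "server_id": "...",      # IP address of this node
                "container_ip": "..."    # container IP address (needed for serving deployment); same as server_id unless specially configured
            },
            ...
        ],
        "status": "completed",
        "version": "1.0"
    }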
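To make the relationship between `device_id` and `rank_id` concrete, the sketch below prints the `device` entries for one server. It assumes ranks are numbered contiguously per node, so that `rank_id = server_index * cards_per_node + device_id`, which is consistent with the starting rank_id values 0 and 8 used in the two-node examples later in this post. `device_entries` is a hypothetical helper. The device IPs are passed in by the caller, and any missing IP is left as `...`.

```shell
# Sketch: print the "device" entries of one server in ranktable.json.
# Usage: device_entries <server_index> <cards_per_node> [device_ip ...]
# Assumption: rank_id = server_index * cards_per_node + device_id.
device_entries() {
  server_index=$1
  cards=$2
  shift 2
  i=0
  while [ "$i" -lt "$cards" ]; do
    sep=","
    # No trailing comma after the last entry, to keep the JSON valid.
    [ "$i" -eq $(( cards - 1 )) ] && sep=""
    printf '        { "device_id": "%d", "device_ip": "%s", "rank_id": "%d" }%s\n' \
      "$i" "${1:-...}" "$(( server_index * cards + i ))" "$sep"
    [ "$#" -gt 0 ] && shift
    i=$(( i + 1 ))
  done
}

# Second server (index 1) of a two-card toy setup with made-up IPs.
device_entries 1 2 10.0.0.1 10.0.0.2
```

For the setup in this post, the call would be made once per server with eight cards each, and the output pasted into the corresponding `device` array.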

Add the environment variables:

    cd /usr/local/Ascend/atb-models/tests/modeltest/
    source /usr/local/Ascend/mindie/set_env.sh
    source /usr/local/Ascend/ascend-toolkit/set_env.sh
    source /usr/local/Ascend/nnal/atb/set_env.sh
    source /usr/local/Ascend/atb-models/set_env.sh
    export ASCEND_RT_VISIBLE_DEVICES=0,1,2,3,4,5,6,7
    export ATB_LLM_BENCHMARK_ENABLE=1
    export ATB_LLM_ENABLE_AUTO_TRANSPOSE=0
    export MASTER_IP={master node IP address}
    export HCCL_CONNECT_TIMEOUT=7200
    export HCCL_EXEC_TIMEOUT=0
    export OMP_NUM_THREADS=1
    # Disable deterministic computation and enable AIV
    export HCCL_DETERMINISTIC=false
    export HCCL_OP_EXPANSION_MODE="AIV"
    export PARALLEL_PARAMS=[dp,tp,moe_tp,moe_ep,pp,microbatch_size]   # parallelism parameters
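Because a multi-node run only fails after a long startup when a variable is missing, it can help to check the environment first. The sketch below lists which of the variables exported above are unset or empty. `missing_env` and `REQUIRED_VARS` are hypothetical names introduced here, and the check only tests for presence, not for valid values.

```shell
# Sketch: report which of the variables exported above are unset or empty.
# REQUIRED_VARS and missing_env are hypothetical names for illustration.
REQUIRED_VARS="ASCEND_RT_VISIBLE_DEVICES ATB_LLM_BENCHMARK_ENABLE \
ATB_LLM_ENABLE_AUTO_TRANSPOSE MASTER_IP HCCL_CONNECT_TIMEOUT \
HCCL_EXEC_TIMEOUT OMP_NUM_THREADS HCCL_DETERMINISTIC \
HCCL_OP_EXPANSION_MODE PARALLEL_PARAMS"

missing_env() {
  missing=""
  for name in $REQUIRED_VARS; do
    # Indirect lookup of the variable named by $name (names come from the fixed list).
    eval "value=\${$name:-}"
    [ -n "$value" ] || missing="${missing:+$missing }$name"
  done
  printf '%s\n' "$missing"
}

echo "missing variables: $(missing_env)"
```

An empty list after the colon means every variable in the list has a value on this node.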

Performance test:

    bash run.sh pa_[data type] [dataset] ([shots]) [batch size] [model name] [model weights path] [ranktable file path] [total cards] [number of nodes] [starting rank_id] $MASTER_IP $PARALLEL_PARAMS

Example:

| Node | Command |
|---|---|
| Master node | `bash run.sh pa_fp16 performance [[256,256]] 64 1 deepseekv2 /Path/to/deepseek_r1_w8a8/ /Path/to/ranktable_2.json 16 2 0 $MASTER_IP $PARALLEL_PARAMS` |
| Secondary node | `bash run.sh pa_fp16 performance [[256,256]] 64 1 deepseekv2 /Path/to/deepseek_r1_w8a8/ /Path/to/ranktable_2.json 16 2 8 $MASTER_IP $PARALLEL_PARAMS` |
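The two commands in the example above differ only in the starting rank_id, which is the node index multiplied by the number of cards per node. The sketch below derives the per-node arguments for the two-node, eight-card setup. `build_perf_cmd` is a hypothetical helper that only prints the command, with `$MASTER_IP` and `$PARALLEL_PARAMS` left unexpanded, and does not run it.

```shell
# Sketch: print the performance-test command for a given node index.
# node_index is 0 for the master node and 1 for the secondary node.
# build_perf_cmd is a hypothetical helper; paths are the placeholders used above.
build_perf_cmd() {
  node_index=$1
  nodes=2
  cards_per_node=8
  total_cards=$(( nodes * cards_per_node ))
  rank_start=$(( node_index * cards_per_node ))
  printf '%s\n' "bash run.sh pa_fp16 performance [[256,256]] 64 1 deepseekv2 /Path/to/deepseek_r1_w8a8/ /Path/to/ranktable_2.json ${total_cards} ${nodes} ${rank_start} \$MASTER_IP \$PARALLEL_PARAMS"
}

build_perf_cmd 0   # master node
build_perf_cmd 1   # secondary node
```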

Accuracy test:

    bash run.sh pa_[data type] [dataset] ([shots]) [batch size] [model name] [model weights path] [ranktable file path] [total cards] [number of nodes] [starting rank_id] $MASTER_IP $PARALLEL_PARAMS

Example:

| Node | Command |
|---|---|
| Master node | `bash run.sh pa_fp16 full_CEval 5 8 deepseekv2 /Path/to/deepseek_r1_w8a8/ /Path/to/ranktable_2.json 16 2 0 $MASTER_IP $PARALLEL_PARAMS` |
| Secondary node | `bash run.sh pa_fp16 full_CEval 5 8 deepseekv2 /Path/to/deepseek_r1_w8a8/ /Path/to/ranktable_2.json 16 2 8 $MASTER_IP $PARALLEL_PARAMS` |

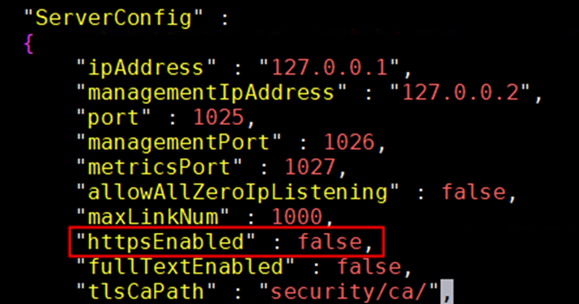

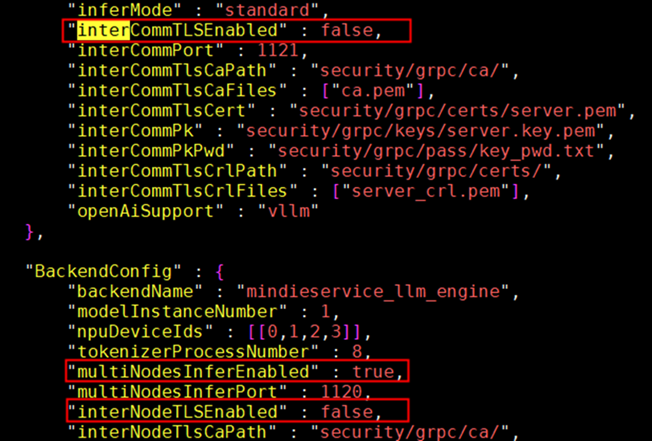

Serving Test

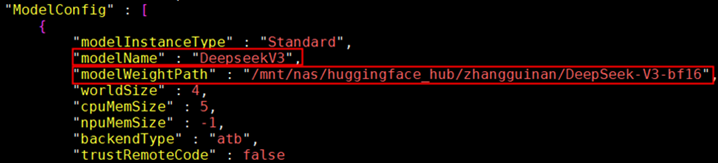

Configure the serving parameters:

    "httpsEnabled" : false,
    "multiNodesInferEnabled" : true,   # enable multi-node inference
    # If security authentication is not needed, set the following two parameters to false
    "interCommTLSEnabled" : false,
    "interNodeTLSEnabled" : false,
    "modelName" : "DeepseekV3",   # does not affect service startup
    "modelWeightPath" : "{weights path}",

Start the service:

    cd /usr/local/Ascend/mindie/latest/mindie-service/
    ./bin/mindieservice_daemon

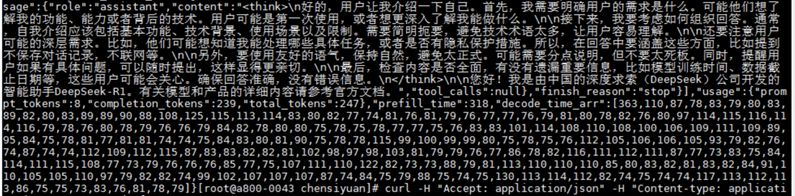

Client test (sample DeepSeek-R1 request):

Request (the prompt asks the model to introduce itself):

    curl -X POST -d '{
        "model": "DeepseekR1",
        "messages": [{
            "role": "user",
            "content": "<think>\n介绍一下你自己"
        }],
        "stream": false
    }' http://XX.X.XX.XX:XXXX/v1/chat/completions

Result:

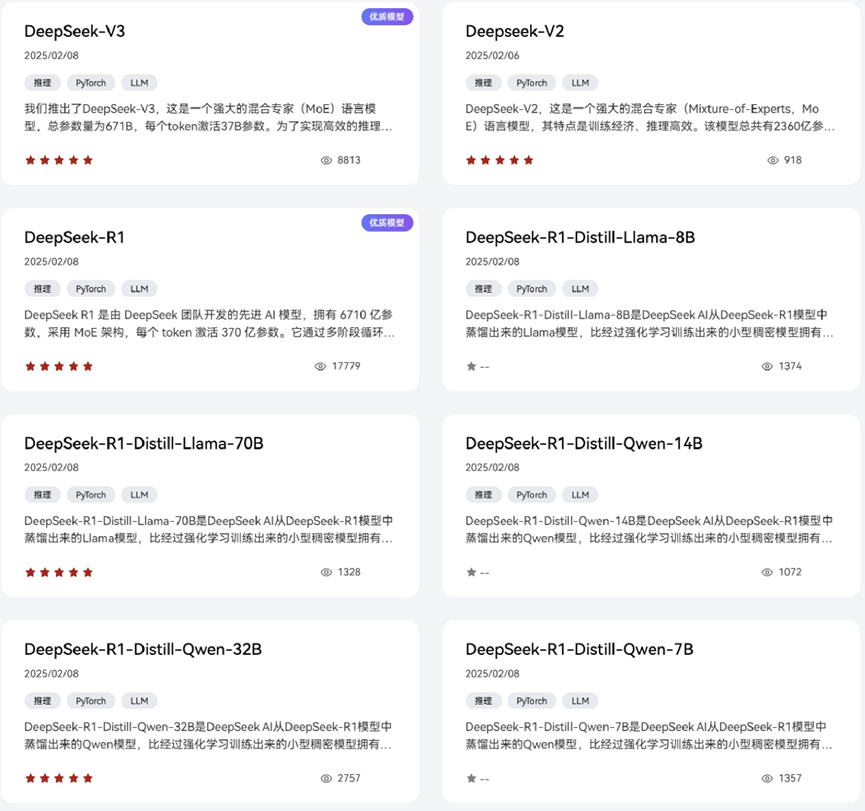
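For repeated testing, the request above can be wrapped in a small script. The sketch below builds the same request body for an arbitrary prompt and posts it with curl. `chat_payload` and `send_chat` are hypothetical helpers, the `Content-Type` header is an addition not present in the original request, and the payload builder does no JSON escaping, so it assumes the prompt contains no double quotes, backslashes, or newlines.

```shell
# Sketch: build the chat request body for a given model name and prompt.
# Assumption: the prompt needs no JSON escaping (no quotes, backslashes, newlines).
chat_payload() {
  # "\\n" in the format string emits a literal backslash-n, i.e. a JSON newline escape.
  printf '{"model":"%s","messages":[{"role":"user","content":"<think>\\n%s"}],"stream":false}\n' "$1" "$2"
}

# Sketch: post the payload. Arguments: base URL, model name, prompt.
# The Content-Type header is an addition to the original request.
send_chat() {
  curl -sS -X POST -H 'Content-Type: application/json' \
    -d "$(chat_payload "$2" "$3")" "$1/v1/chat/completions"
}

# Show the body that would be sent; no network access is needed for this line.
chat_payload "DeepseekR1" "Introduce yourself"
```

With a running service, a call would look like `send_chat http://XX.X.XX.XX:XXXX DeepseekR1 "Introduce yourself"`, with the address replaced by the real host and port.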

For links to more DeepSeek models and their usage guides, see the Ascend community:

DeepSeek-R1

https://www.hiascend.com/software/modelzoo/models/detail/68457b8a51324310aad9a0f55c3e56e3

DeepSeek-V3

https://www.hiascend.com/software/modelzoo/models/detail/678bdeb4e1a64c9dae51d353d84ddd15

DeepSeek-R1-Distill-Llama-70B

https://www.hiascend.com/software/modelzoo/models/detail/ee3f9897743a4341b43710f8d204733a

DeepSeek-R1-Distill-Qwen-7B

https://www.hiascend.com/software/modelzoo/models/detail/11aa2a48479d4d229a9830b8e41fc011