Performing Collective Communication on Tensor Nodes

Call the operator prototype APIs on the tensors that take part in collective communication.

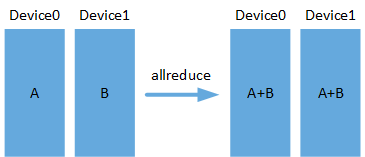

allreduce

allreduce: performs an allreduce within the group. The same-named tensor on every node is reduced, and the reduction operation is specified by the `reduction` parameter.

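```python
#--------------------- allreduce test (2 NPUs) ---------------------
import tensorflow as tf
from npu_bridge.npu_init import *

# Each rank creates a 1x3 tensor of random values in [1, 10).
tensor = tf.random_uniform((1, 3), minval=1, maxval=10, dtype=tf.float32)
# Sum the same-named tensor across all ranks; every rank receives the result.
allreduce_test = hccl_ops.allreduce(tensor, "sum")
```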
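The following is a pure-Python model of what allreduce computes, not a call into HCCL. Each inner list stands for one rank's copy of the same-named tensor. The mapping from the `reduction` strings `"sum"`, `"prod"`, `"max"` and `"min"` to element-wise operations is an assumption of this sketch, and `simulate_allreduce` is a hypothetical helper name.

```python
from functools import reduce
import operator

# Assumed mapping of `reduction` strings to element-wise operations.
_REDUCERS = {"sum": operator.add, "prod": operator.mul, "max": max, "min": min}

def simulate_allreduce(per_rank_inputs, reduction):
    """Pure-Python model of allreduce semantics (not HCCL itself).

    per_rank_inputs[i] is rank i's 1-D copy of the same-named tensor.
    Returns what each rank holds afterwards: the same reduced tensor.
    """
    op = _REDUCERS[reduction]
    # Reduce position by position across all ranks.
    reduced = [reduce(op, column) for column in zip(*per_rank_inputs)]
    # Every rank receives its own copy of the identical result.
    return [list(reduced) for _ in per_rank_inputs]

# Two "ranks", mirroring the 2-NPU test above.
print(simulate_allreduce([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]], "sum"))
# → [[5.0, 7.0, 9.0], [5.0, 7.0, 9.0]]
```

Returning one copy per rank makes the key property visible: after allreduce, no rank holds a different value from any other.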

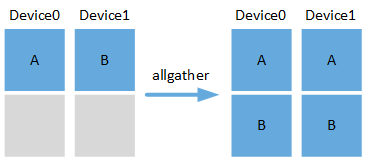

allgather

allgather: performs an allgather within the group, combining the input tensors of all nodes so that every node receives the combined result.

```python
#--------------------- allgather test (2 NPUs) ---------------------
import tensorflow as tf
from npu_bridge.npu_init import *

cCon = tf.constant([1.0, 2.0, 3.0])
# Second argument is rank_size: the number of ranks whose inputs are gathered.
allgather_test = hccl_ops.allgather(cCon, 2)
#---------- rank 0/1: allgather_test = [1.0, 2.0, 3.0, 1.0, 2.0, 3.0] ----------
```
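As a sketch of the semantics only (not HCCL), the model below assumes allgather concatenates the 1-D inputs in rank order, rank 0 first, which is consistent with the expected output in the test above. `simulate_allgather` is a hypothetical helper name.

```python
def simulate_allgather(per_rank_inputs):
    """Pure-Python model of allgather semantics (not HCCL itself).

    per_rank_inputs[i] is the 1-D input of rank i. Every rank receives the
    concatenation of all inputs, ordered by rank id (rank 0 first).
    """
    gathered = []
    for rank_input in per_rank_inputs:  # list index == rank id
        gathered.extend(rank_input)
    # Each rank holds its own copy of the same gathered tensor.
    return [list(gathered) for _ in per_rank_inputs]

# Same input on both ranks, as in the 2-NPU test above.
print(simulate_allgather([[1.0, 2.0, 3.0], [1.0, 2.0, 3.0]]))
# → [[1.0, 2.0, 3.0, 1.0, 2.0, 3.0], [1.0, 2.0, 3.0, 1.0, 2.0, 3.0]]
```

With different inputs per rank, for example `[[1.0], [2.0]]`, both ranks would hold `[1.0, 2.0]` in this model, so the output is `rank_size` times as long as each input.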

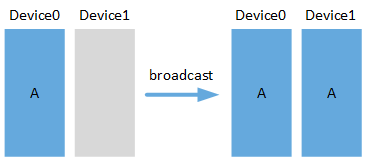

broadcast

broadcast: performs a broadcast within the group, sending the data of the root node to all other ranks.

```python
#--------------------- broadcast test (2 NPUs) ---------------------
import tensorflow as tf
from npu_bridge.npu_init import *

cCon = tf.Variable([1.0, 2.0, 3.0])
# broadcast takes a list of tensors.
inputs = [cCon]
# Broadcast from root rank 0 to every other rank in the group.
broadcast_test = hccl_ops.broadcast(inputs, 0)
#---------------- rank 0/1: broadcast_test = [1.0, 2.0, 3.0] ----------------
```
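A minimal pure-Python model of broadcast (not HCCL) is sketched below. It follows the test above in passing a list of tensors per rank; `simulate_broadcast` is a hypothetical helper, and tensors are modeled as plain 1-D lists.

```python
def simulate_broadcast(per_rank_inputs, root_rank):
    """Pure-Python model of broadcast semantics (not HCCL itself).

    per_rank_inputs[i] is the list of tensors passed in by rank i. Afterwards
    every rank holds a copy of the root rank's tensors; the other ranks'
    original values are discarded.
    """
    root_tensors = per_rank_inputs[root_rank]
    return [[list(t) for t in root_tensors] for _ in per_rank_inputs]

# Rank 0 is the root; rank 1 starts with different values.
print(simulate_broadcast([[[1.0, 2.0, 3.0]], [[0.0, 0.0, 0.0]]], root_rank=0))
# → [[[1.0, 2.0, 3.0]], [[1.0, 2.0, 3.0]]]
```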

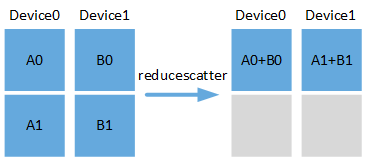

reducescatter

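reduce_scatter: performs a reducescatter within the group. The same-named tensors of all nodes are reduced with the operation specified by the `reduction` parameter, and the result is split evenly across the ranks, each rank receiving one slice.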

```python
#--------------------- reducescatter test (2 NPUs) ---------------------
import tensorflow as tf
from npu_bridge.npu_init import *

cCon = tf.constant([1.0, 2.0, 3.0, 4.0])
# Sum across 2 ranks (rank_size = 2), then give each rank one half of the result.
reducescatter_test = hccl_ops.reduce_scatter(cCon, "sum", 2)
#----------------- rank 0: reducescatter_test = [2.0, 4.0] -----------------
#----------------- rank 1: reducescatter_test = [6.0, 8.0] -----------------
```
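The expected results above follow from two steps: reduce element-wise across ranks, then slice. The pure-Python model below (not HCCL) makes both steps explicit for 1-D inputs. The `reduction` string mapping and the divisibility check are assumptions of this sketch, and `simulate_reduce_scatter` is a hypothetical name.

```python
from functools import reduce
import operator

# Assumed mapping of `reduction` strings to element-wise operations.
_REDUCERS = {"sum": operator.add, "prod": operator.mul, "max": max, "min": min}

def simulate_reduce_scatter(per_rank_inputs, reduction, rank_size):
    """Pure-Python model of reduce_scatter semantics (not HCCL itself).

    1. Reduce the same-named 1-D tensor element-wise across all ranks.
    2. Split the reduced tensor into rank_size equal slices; rank r gets slice r.
    """
    if len(per_rank_inputs) != rank_size:
        raise ValueError("need exactly one input per rank")
    op = _REDUCERS[reduction]
    reduced = [reduce(op, column) for column in zip(*per_rank_inputs)]
    if len(reduced) % rank_size != 0:
        raise ValueError("tensor length must be divisible by rank_size")
    chunk = len(reduced) // rank_size
    return [reduced[r * chunk:(r + 1) * chunk] for r in range(rank_size)]

# Reproduces the 2-NPU test above: [1,2,3,4] summed over 2 ranks is [2,4,6,8].
print(simulate_reduce_scatter([[1.0, 2.0, 3.0, 4.0]] * 2, "sum", 2))
# → [[2.0, 4.0], [6.0, 8.0]]
```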

send

send: provides point-to-point communication within the group, sending data to the specified destination rank.

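```python
#--------------------- send test (2 NPUs, runs on rank 0) ---------------------
import tensorflow as tf
from npu_bridge.npu_init import *

# Tensor to send; the receiver must declare the same shape and dtype.
tensor = tf.constant([1.0, 2.0, 3.0], dtype=tf.float32)
sr_tag = 0      # message tag; must match the tag used by receive
dest_rank = 1   # rank that receives the data
hccl_ops.send(tensor, sr_tag, dest_rank)
```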

receive

receive: provides point-to-point communication within the group, receiving data sent from the specified source rank.

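```python
#--------------------- receive test (2 NPUs, runs on rank 1) ---------------------
import tensorflow as tf
from npu_bridge.npu_init import *

# The receiver declares the shape and dtype of the incoming tensor in advance;
# they must match the tensor sent by rank 0.
shape = tf.TensorShape([3])
dtype = tf.float32
sr_tag = 0      # must match the sender's sr_tag
src_rank = 0    # rank that sends the data
tensor = hccl_ops.receive(shape, dtype, sr_tag, src_rank)
```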
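The send and receive tests above pair up through `sr_tag` and the source/destination ranks. The toy model below (not HCCL) illustrates that matching rule with in-memory mailboxes keyed by `(src_rank, dest_rank, sr_tag)`. Unlike real point-to-point communication it never blocks, and the class name, method signatures and the 1-D length check standing in for the declared shape are all hypothetical.

```python
from collections import defaultdict, deque

class PointToPointModel:
    """Toy model of tag-matched send/receive between ranks (not HCCL).

    A message is identified by (src_rank, dest_rank, sr_tag); receive must name
    the same source rank and tag that the sender used.
    """

    def __init__(self):
        self._mailboxes = defaultdict(deque)

    def send(self, src_rank, tensor, sr_tag, dest_rank):
        # Queue a copy of the 1-D tensor for the matching receiver.
        self._mailboxes[(src_rank, dest_rank, sr_tag)].append(list(tensor))

    def receive(self, dest_rank, shape, sr_tag, src_rank):
        box = self._mailboxes[(src_rank, dest_rank, sr_tag)]
        if not box:
            raise LookupError("no matching send for this (src_rank, sr_tag)")
        data = box.popleft()
        # The receiver declares the shape in advance; check it matches (1-D only).
        if len(data) != shape[0]:
            raise ValueError("received data does not match the declared shape")
        return data

p2p = PointToPointModel()
p2p.send(src_rank=0, tensor=[1.0, 2.0, 3.0], sr_tag=0, dest_rank=1)
print(p2p.receive(dest_rank=1, shape=(3,), sr_tag=0, src_rank=0))
# → [1.0, 2.0, 3.0]
```

A receive with a different `sr_tag` or `src_rank` finds no matching message in this model, which mirrors why both tests above use `sr_tag = 0` and complementary ranks.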
