功能说明

该接口实现带转置的2D格式数据从A1/B1到A2/B2的加载。

下面通过示例来讲解接口功能和关键参数:下文图中一个N形或者一个Z形代表一个分形。

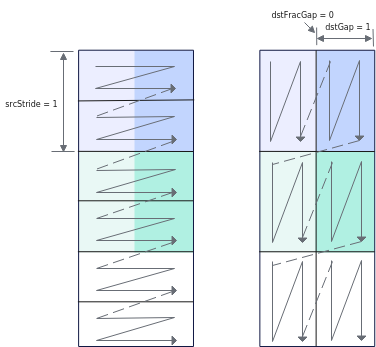

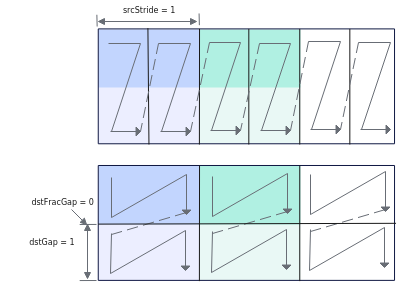

- 对于uint8_t/int8_t数据类型, 每次迭代处理32*32*1B数据,可处理2个分形(一个分形512B),每次迭代中,源操作数中2个连续的16*32分形将被合并为1个32*32的方块矩阵,基于方块矩阵做转置,转置后分裂为2个16*32分形,根据目的操作数分形间隔等参数可以有不同的排布。如下图示例:

- 因为每次迭代处理32*32*1B数据,需要3次迭代可以完成,repeatTimes = 3;

- srcStride = 1,表示相邻迭代间,源操作数前一个方块矩阵与后一个方块矩阵起始地址的间隔为1(单位:32*32*1B),这里的单位实际上是拼接后的方块矩阵的大小;

- dstGap = 1,表示相邻迭代间,目的操作数前一个迭代第一个分形的结束地址到下一个迭代第一个分形起始地址的间隔为1(单位:512B);

- dstFracGap = 0,表示每个迭代内目的操作数前一个分形的结束地址与后一个分形起始地址的间隔为0(单位:512B)。

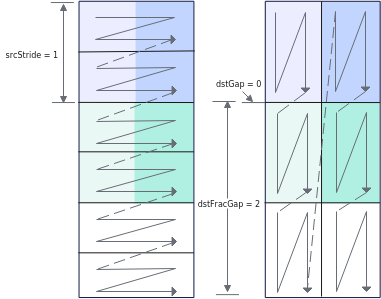

如下图示例:

- repeatTimes和srcStride的解释和上图示例一致。

- dstGap = 0,表示相邻迭代间,目的操作数前一个迭代第一个分形的结束地址和下一个迭代第一个分形起始地址无间隔。

- dstFracGap = 2,表示每个迭代内目的操作数前一个分形的结束地址与后一个分形起始地址的间隔为2(单位:512B)。

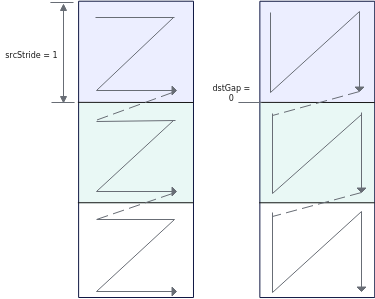

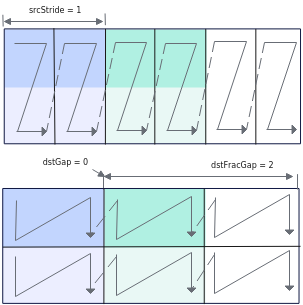

- 对于half/bfloat16_t数据类型, 每次迭代处理16*16*2B数据,可处理1个分形(一个分形512B),每次迭代中,源操作数中1个16*16分形将被转置。

- 因为每次迭代处理16*16*2B数据,需要3次迭代可以完成,repeatTimes = 3;

- srcStride = 1,表示相邻迭代间,源操作数前一个方块矩阵与后一个方块矩阵起始地址的间隔为1 (单位:16*16*2B);

- dstGap = 0,表示相邻迭代间,目的操作数前一个迭代第一个分形的结束地址到下一个迭代第一个分形起始地址无间隔;

- 该场景下,因为其分形即为方块矩阵,每个迭代处理一个分形,不存在迭代内分形的间隔,该参数设置无效。

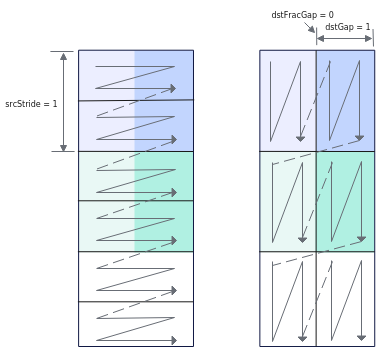

- 对于float/int32_t/uint32_t数据类型, 每次迭代处理16*16*4B数据,可处理2个分形(一个分形512B),每次迭代中,源操作数2个连续的16*8分形将被合并为1个16*16的方块矩阵,基于方块矩阵做转置,转置后分裂为2个16*8分形,根据目的操作数分形间隔等参数可以有不同的排布。如下图示例:

- 因为每次迭代处理16*16*4B数据,需要3次迭代可以完成,repeatTimes = 3;

- srcStride = 1,表示相邻迭代间,源操作数前一个方块矩阵与后一个方块矩阵起始地址的间隔为1(单位:16*16*4B),这里的单位实际上是拼接后的方块矩阵的大小;

- dstGap = 1,表示相邻迭代间,目的操作数前一个迭代第一个分形的结束地址到下一个迭代第一个分形起始地址的间隔为1(单位:512B);

- dstFracGap = 0,表示每个迭代内目的操作数前一个分形结束地址与后一个分形起始地址的间隔为0(单位:512B)。

如下图示例:

- repeatTimes和srcStride的解释和上图示例一致。

- dstGap = 0,表示相邻迭代间,目的操作数前一个迭代第一个分形的结束地址和下一个迭代第一个分形起始地址无间隔。

- dstFracGap = 2,表示每个迭代内目的操作数前一个分形结束地址与后一个分形起始地址的间隔为2(单位:512B)。

- 对于int4b_t数据类型, 每次迭代处理64*64*0.5B数据,可处理4个分形(一个分形512B),每次迭代中,源操作数中4个连续的16*64分形将被合并为1个64*64的方块矩阵,基于方块矩阵做转置,转置后分裂为4个16*64分形,根据目的操作数分形间隔等参数可以有不同的排布。

如下图示例:

- 因为每次迭代处理64*64*0.5B数据,需要3次迭代可以完成,repeatTimes = 3;

- srcStride = 1,表示相邻迭代间,源操作数前一个方块矩阵与后一个方块矩阵起始地址的间隔为1(单位:64*64*0.5B),这里的单位实际上是拼接后的方块矩阵的大小;

- dstGap = 1,表示相邻迭代间,目的操作数前一个迭代第一个分形的结束地址到下一个迭代第一个分形起始地址的间隔为1(单位:512B);

- dstFracGap = 0,表示每个迭代内目的操作数前一个分形的结束地址与后一个分形起始地址的间隔为0(单位:512B)。

如下图示例:

- repeatTimes和srcStride的解释和上图示例一致。

- dstGap = 0,表示相邻迭代间,目的操作数前一个迭代第一个分形的结束地址和下一个迭代第一个分形起始地址无间隔。

- dstFracGap = 2,表示每个迭代内目的操作数前一个分形的结束地址与后一个分形起始地址的间隔为2(单位:512B)。

函数原型

1 2 | template <typename T> __aicore__ inline void LoadDataWithTranspose(const LocalTensor<T>& dstLocal, const LocalTensor<T>& srcLocal, const LoadData2dTransposeParams& loadDataParams) |

参数说明

参数名称 |

输入/输出 |

含义 |

||

|---|---|---|---|---|

dstLocal |

输出 |

目的操作数,结果矩阵,类型为LocalTensor,支持的TPosition为A2/B2。 LocalTensor的起始地址需要保证512字节对齐。 Atlas A2训练系列产品/Atlas 800I A2推理产品,支持的数据类型为:half/bfloat16_t/float/int32_t/uint32_t/uint8_t/int8_t。 Atlas 200I/500 A2推理产品,支持的数据类型为:uint8_t/int8_t/uint16_t/int16_t/half/bfloat16_t/uint32_t/int32_t/float。 当TPosition为B2时,额外支持int4b_t数据类型。 数据类型和srcLocal的数据类型保持一致。 |

||

srcLocal |

输入 |

源操作数,类型为LocalTensor,支持的TPosition为A1/B1。 LocalTensor的起始地址需要保证32字节对齐。 Atlas A2训练系列产品/Atlas 800I A2推理产品,支持的数据类型为:half/bfloat16_t/float/int32_t/uint32_t/uint8_t/int8_t。 Atlas 200I/500 A2推理产品,支持的数据类型为:uint8_t/int8_t/uint16_t/int16_t/half/bfloat16_t/uint32_t/int32_t/float。 当TPosition为B1时,额外支持int4b_t数据类型。 数据类型和dstLocal的数据类型保持一致。 |

||

LoadData2dTransposeParams |

输入 |

LoadDataWithTranspose相关参数,类型为LoadData2dTransposeParams,结构体具体定义为:

参数说明请参考表2。 |

参数名称 |

输入/输出 |

含义 |

|---|---|---|

startIndex |

输入 |

方块矩阵 ID,搬运起始位置为源操作数中第几个方块矩阵(0 为源操作数中第1个方块矩阵)。取值范围:startIndex∈[0, 65535] 。默认为0。 例如,源操作数中有20个大小为16*8*4B的分形(数据类型为float),startIndex=1表示搬运起始位置为第2个方块矩阵,即将第3和第4个分形从源操作数中转置到目的操作数中(第1、2个分形组成第1个方块矩阵,第3、4个分形组成第2个方块矩阵)。 |

repeatTimes |

输入 |

迭代次数。 对于uint8_t/int8_t数据类型,每次迭代处理32*32*1B数据; 对于half/bfloat16_t数据类型,每次迭代处理16*16*2B数据; 对于float/int32_t/uint32_t数据类型,每次迭代处理16*16*4B数据。 对于int4b_t数据类型,每次迭代处理16*64*0.5B数据。 取值范围:repeatTimes∈[1, 255]。 |

srcStride |

输入 |

相邻迭代间,源操作数前一个分形与后一个分形起始地址的间隔。这里的单位实际上是拼接后的方块矩阵的大小。 对于uint8_t/int8_t数据类型,单位是32*32*1B; 对于half/bfloat16_t数据类型,单位是16*16*2B; 对于float/int32_t/uint32_t数据类型,单位是16*16*4B。 对于int4b_t数据类型,每次迭代处理16*64*0.5B数据。 取值范围:srcStride∈[0, 65535]。默认为0。 |

dstGap |

输入 |

相邻迭代间,目的操作数前一个迭代第一个分形的结束地址到下一个迭代第一个分形起始地址的间隔,单位:512B。取值范围:dstGap∈[0, 65535]。默认为0。 |

dstFracGap |

输入 |

每个迭代内目的操作数转置前一个分形结束地址与后一个分形起始地址的间隔,单位为512B,仅在数据类型为float/int32_t/uint32_t/uint8_t/int8_t/int4b_t时有效。 |

注意事项

- repeat=0表示不执行搬运操作。

- 开发者需要保证目的操作数转置后的分形没有重叠。

- 操作数地址偏移对齐要求请参见通用约束。

支持的型号

Atlas A2训练系列产品/Atlas 800I A2推理产品

Atlas 200I/500 A2推理产品

调用示例

- 示例1:该示例输入a矩阵为int8_t类型,shape为[16,32],输入b矩阵为int8_t类型,shape为[32,64],输出c的类型为int32_t。a矩阵从A1->A2不转置,b矩阵从B1->B2转置,之后进行Mmad计算和Fixpipe计算。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152

#include "kernel_operator.h" template <typename dst_T, typename fmap_T, typename weight_T, typename dstCO1_T> class KernelMatmul { public: __aicore__ inline KernelMatmul() { aSize = m * k; bSize = k * n; cSize = m * n; nBlocks = n / 16; } __aicore__ inline void Init(__gm__ uint8_t *a, __gm__ uint8_t *b, __gm__ uint8_t *c) { aGM.SetGlobalBuffer((__gm__ fmap_T *)a); bGM.SetGlobalBuffer((__gm__ weight_T *)b); cGM.SetGlobalBuffer((__gm__ dstCO1_T *)c); pipe.InitBuffer(inQueueA1, 1, aSize * sizeof(fmap_T)); pipe.InitBuffer(inQueueA2, 1, aSize * sizeof(fmap_T)); pipe.InitBuffer(inQueueB1, 1, bSize * sizeof(weight_T)); pipe.InitBuffer(inQueueB2, 2, bSize * sizeof(weight_T)); pipe.InitBuffer(outQueueCO1, 1, cSize * sizeof(dstCO1_T)); } __aicore__ inline void Process() { CopyIn(); SplitA(); SplitB(); Compute(); CopyOut(); } private: __aicore__ inline void CopyIn() { AscendC::LocalTensor<fmap_T> a1Local = inQueueA1.AllocTensor<fmap_T>(); AscendC::LocalTensor<weight_T> b1Local = inQueueB1.AllocTensor<weight_T>(); AscendC::Nd2NzParams dataCopyA1Params; dataCopyA1Params.ndNum = 1; dataCopyA1Params.nValue = m; dataCopyA1Params.dValue = k; dataCopyA1Params.srcNdMatrixStride = 0; dataCopyA1Params.srcDValue = k; dataCopyA1Params.dstNzC0Stride = m; dataCopyA1Params.dstNzNStride = 1; dataCopyA1Params.dstNzMatrixStride = 0; AscendC::DataCopy(a1Local, aGM, dataCopyA1Params); AscendC::Nd2NzParams dataCopyB1Params; dataCopyB1Params.ndNum = 1; dataCopyB1Params.nValue = k; dataCopyB1Params.dValue = n; dataCopyB1Params.srcNdMatrixStride = 0; dataCopyB1Params.srcDValue = n; dataCopyB1Params.dstNzC0Stride = k; dataCopyB1Params.dstNzNStride = 1; dataCopyB1Params.dstNzMatrixStride = 0; AscendC::DataCopy(b1Local, bGM, dataCopyB1Params); inQueueA1.EnQue(a1Local); inQueueB1.EnQue(b1Local); } __aicore__ inline void SplitA() { AscendC::LocalTensor<fmap_T> a1Local = inQueueA1.DeQue<fmap_T>(); AscendC::LocalTensor<fmap_T> a2Local = inQueueA2.AllocTensor<fmap_T>(); AscendC::LoadData2DParams loadL0AParams; loadL0AParams.repeatTimes = aSize * sizeof(fmap_T) / 512; loadL0AParams.srcStride = 1; loadL0AParams.ifTranspose = false; AscendC::LoadData(a2Local, a1Local, loadL0AParams); inQueueA2.EnQue<fmap_T>(a2Local); inQueueA1.FreeTensor(a1Local); } __aicore__ inline void SplitB() { AscendC::LocalTensor<weight_T> b1Local = inQueueB1.DeQue<weight_T>(); AscendC::LocalTensor<weight_T> b2Local = inQueueB2.AllocTensor<weight_T>(); AscendC::LoadData2dTransposeParams loadDataParams; loadDataParams.startIndex = 0; nBlockSize = 32; loadDataParams.repeatTimes = n / nBlockSize; loadDataParams.srcStride = 1; loadDataParams.dstGap = 1; loadDataParams.dstFracGap = 0; AscendC::LoadDataWithTranspose(b2Local, b1Local, loadDataParams); inQueueB1.FreeTensor(b1Local); inQueueB2.EnQue<weight_T>(b2Local); } __aicore__ inline void Compute() { AscendC::LocalTensor<fmap_T> a2Local = inQueueA2.DeQue<fmap_T>(); AscendC::LocalTensor<weight_T> b2Local = inQueueB2.DeQue<weight_T>(); AscendC::LocalTensor<dstCO1_T> c1Local = outQueueCO1.AllocTensor<dstCO1_T>(); AscendC::MmadParams mmadParams; mmadParams.m = m; mmadParams.n = n; mmadParams.k = k; AscendC::Mmad(c1Local, a2Local, b2Local, mmadParams); outQueueCO1.EnQue<dstCO1_T>(c1Local); inQueueA2.FreeTensor(a2Local); inQueueB2.FreeTensor(b2Local); } __aicore__ inline void CopyOut() { AscendC::LocalTensor<dstCO1_T> c1Local = outQueueCO1.DeQue<dstCO1_T>(); AscendC::FixpipeParamsV220 fixpipeParams; fixpipeParams.nSize = n; fixpipeParams.mSize = m; fixpipeParams.srcStride = m; fixpipeParams.dstStride = n; fixpipeParams.ndNum = 1; fixpipeParams.srcNdStride = 0; fixpipeParams.dstNdStride = 0; AscendC::Fixpipe(cGM, c1Local, fixpipeParams); outQueueCO1.FreeTensor(c1Local); } private: AscendC::TPipe pipe; AscendC::TQue<AscendC::QuePosition::A1, 1> inQueueA1; AscendC::TQue<AscendC::QuePosition::A2, 1> inQueueA2; AscendC::TQue<AscendC::QuePosition::B1, 1> inQueueB1; AscendC::TQue<AscendC::QuePosition::B2, 1> inQueueB2; // dst queue AscendC::TQue<AscendC::QuePosition::CO1, 1> outQueueCO1; AscendC::GlobalTensor<fmap_T> aGM; AscendC::GlobalTensor<weight_T> bGM; AscendC::GlobalTensor<dst_T> cGM; uint16_t m = 16, k = 32, n = 64; uint8_t nBlockSize = 16; uint16_t c0Size = 16; uint16_t aSize, bSize, cSize, nBlocks; }; extern "C" __global__ __aicore__ void cube_matmul_loaddata_operator_int8_t(__gm__ uint8_t *a, __gm__ uint8_t *b, __gm__ uint8_t *c) { KernelMatmul<dst_type, fmap_type, weight_type, dstCO1_type> op; op.Init(a, b, c); op.Process(); }

- 示例2:该示例输入a矩阵为half类型,shape为[16,32],输入b矩阵为half类型,shape为[32,32],输出c的类型为float。a矩阵从A1->A2不转置,b矩阵从B1->B2转置,之后进行Mmad计算和Fixpipe计算。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153

#include "kernel_operator.h" template <typename dst_T, typename fmap_T, typename weight_T, typename dstCO1_T> class KernelMatmul { public: __aicore__ inline KernelMatmul() { aSize = m * k; bSize = k * n; cSize = m * n; nBlocks = n / 16; } __aicore__ inline void Init(__gm__ uint8_t *a, __gm__ uint8_t *b, __gm__ uint8_t *c) { aGM.SetGlobalBuffer((__gm__ fmap_T *)a); bGM.SetGlobalBuffer((__gm__ weight_T *)b); cGM.SetGlobalBuffer((__gm__ dstCO1_T *)c); pipe.InitBuffer(inQueueA1, 1, aSize * sizeof(fmap_T)); pipe.InitBuffer(inQueueA2, 1, aSize * sizeof(fmap_T)); pipe.InitBuffer(inQueueB1, 1, bSize * sizeof(weight_T)); pipe.InitBuffer(inQueueB2, 2, bSize * sizeof(weight_T)); pipe.InitBuffer(outQueueCO1, 1, cSize * sizeof(dstCO1_T)); } __aicore__ inline void Process() { CopyIn(); SplitA(); SplitB(); Compute(); CopyOut(); } private: __aicore__ inline void CopyIn() { AscendC::LocalTensor<fmap_T> a1Local = inQueueA1.AllocTensor<fmap_T>(); AscendC::LocalTensor<weight_T> b1Local = inQueueB1.AllocTensor<weight_T>(); AscendC::Nd2NzParams dataCopyA1Params; dataCopyA1Params.ndNum = 1; dataCopyA1Params.nValue = m; dataCopyA1Params.dValue = k; dataCopyA1Params.srcNdMatrixStride = 0; dataCopyA1Params.srcDValue = k; dataCopyA1Params.dstNzC0Stride = m; dataCopyA1Params.dstNzNStride = 1; dataCopyA1Params.dstNzMatrixStride = 0; AscendC::DataCopy(a1Local, aGM, dataCopyA1Params); AscendC::Nd2NzParams dataCopyB1Params; dataCopyB1Params.ndNum = 1; dataCopyB1Params.nValue = k; dataCopyB1Params.dValue = n; dataCopyB1Params.srcNdMatrixStride = 0; dataCopyB1Params.srcDValue = n; dataCopyB1Params.dstNzC0Stride = k; dataCopyB1Params.dstNzNStride = 1; dataCopyB1Params.dstNzMatrixStride = 0; AscendC::DataCopy(b1Local, bGM, dataCopyB1Params); inQueueA1.EnQue(a1Local); inQueueB1.EnQue(b1Local); } __aicore__ inline void SplitA() { AscendC::LocalTensor<fmap_T> a1Local = inQueueA1.DeQue<fmap_T>(); AscendC::LocalTensor<fmap_T> a2Local = inQueueA2.AllocTensor<fmap_T>(); AscendC::LoadData2DParams loadL0AParams; loadL0AParams.repeatTimes = aSize * sizeof(fmap_T) / 512; loadL0AParams.srcStride = 1; loadL0AParams.ifTranspose = false; AscendC::LoadData(a2Local, a1Local, loadL0AParams); inQueueA2.EnQue<fmap_T>(a2Local); inQueueA1.FreeTensor(a1Local); } __aicore__ inline void SplitB() { AscendC::LocalTensor<weight_T> b1Local = inQueueB1.DeQue<weight_T>(); AscendC::LocalTensor<weight_T> b2Local = inQueueB2.AllocTensor<weight_T>(); AscendC::LoadData2dTransposeParams loadDataParams; loadDataParams.startIndex = 0; nBlockSize = 16; loadDataParams.repeatTimes = k / nBlockSize; loadDataParams.srcStride = 1; loadDataParams.dstGap = 1; for (int i = 0; i < (n / nBlockSize); ++i) { AscendC::LoadDataWithTranspose(b2Local[i * 16 * nBlockSize], b1Local[i * k * nBlockSize], loadDataParams); } inQueueB1.FreeTensor(b1Local); inQueueB2.EnQue<weight_T>(b2Local); } __aicore__ inline void Compute() { AscendC::LocalTensor<fmap_T> a2Local = inQueueA2.DeQue<fmap_T>(); AscendC::LocalTensor<weight_T> b2Local = inQueueB2.DeQue<weight_T>(); AscendC::LocalTensor<dstCO1_T> c1Local = outQueueCO1.AllocTensor<dstCO1_T>(); AscendC::MmadParams mmadParams; mmadParams.m = m; mmadParams.n = n; mmadParams.k = k; AscendC::Mmad(c1Local, a2Local, b2Local, mmadParams); outQueueCO1.EnQue<dstCO1_T>(c1Local); inQueueA2.FreeTensor(a2Local); inQueueB2.FreeTensor(b2Local); } __aicore__ inline void CopyOut() { AscendC::LocalTensor<dstCO1_T> c1Local = outQueueCO1.DeQue<dstCO1_T>(); AscendC::FixpipeParamsV220 fixpipeParams; fixpipeParams.nSize = n; fixpipeParams.mSize = m; fixpipeParams.srcStride = m; fixpipeParams.dstStride = n; fixpipeParams.ndNum = 1; fixpipeParams.srcNdStride = 0; fixpipeParams.dstNdStride = 0; AscendC::Fixpipe(cGM, c1Local, fixpipeParams); outQueueCO1.FreeTensor(c1Local); } private: AscendC::TPipe pipe; AscendC::TQue<AscendC::QuePosition::A1, 1> inQueueA1; AscendC::TQue<AscendC::QuePosition::A2, 1> inQueueA2; AscendC::TQue<AscendC::QuePosition::B1, 1> inQueueB1; AscendC::TQue<AscendC::QuePosition::B2, 1> inQueueB2; // dst queue AscendC::TQue<AscendC::QuePosition::CO1, 1> outQueueCO1; AscendC::GlobalTensor<fmap_T> aGM; AscendC::GlobalTensor<weight_T> bGM; AscendC::GlobalTensor<dst_T> cGM; uint16_t m = 16, k = 32, n = 32; uint8_t nBlockSize = 16; uint16_t c0Size = 16; uint16_t aSize, bSize, cSize, nBlocks; }; extern "C" __global__ __aicore__ void cube_matmul_loaddata_operator_half(__gm__ uint8_t *a, __gm__ uint8_t *b, __gm__ uint8_t *c) { KernelMatmul<dst_type, fmap_type, weight_type, dstCO1_type> op; op.Init(a, b, c); op.Process(); }

- 示例3:该示例输入a矩阵为float类型,shape为[16,16],输入b矩阵为float类型,shape为[16,32],输出c的类型为float。a矩阵从A1->A2不转置,b矩阵从B1->B2转置,之后进行Mmad计算和Fixpipe计算。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152

#include "kernel_operator.h" template <typename dst_T, typename fmap_T, typename weight_T, typename dstCO1_T> class KernelMatmul { public: __aicore__ inline KernelMatmul() { aSize = m * k; bSize = k * n; cSize = m * n; nBlocks = n / 16; } __aicore__ inline void Init(__gm__ uint8_t *a, __gm__ uint8_t *b, __gm__ uint8_t *c) { aGM.SetGlobalBuffer((__gm__ fmap_T *)a); bGM.SetGlobalBuffer((__gm__ weight_T *)b); cGM.SetGlobalBuffer((__gm__ dstCO1_T *)c); pipe.InitBuffer(inQueueA1, 1, aSize * sizeof(fmap_T)); pipe.InitBuffer(inQueueA2, 1, aSize * sizeof(fmap_T)); pipe.InitBuffer(inQueueB1, 1, bSize * sizeof(weight_T)); pipe.InitBuffer(inQueueB2, 2, bSize * sizeof(weight_T)); pipe.InitBuffer(outQueueCO1, 1, cSize * sizeof(dstCO1_T)); } __aicore__ inline void Process() { CopyIn(); SplitA(); SplitB(); Compute(); CopyOut(); } private: __aicore__ inline void CopyIn() { AscendC::LocalTensor<fmap_T> a1Local = inQueueA1.AllocTensor<fmap_T>(); AscendC::LocalTensor<weight_T> b1Local = inQueueB1.AllocTensor<weight_T>(); AscendC::Nd2NzParams dataCopyA1Params; dataCopyA1Params.ndNum = 1; dataCopyA1Params.nValue = m; dataCopyA1Params.dValue = k; dataCopyA1Params.srcNdMatrixStride = 0; dataCopyA1Params.srcDValue = k; dataCopyA1Params.dstNzC0Stride = m; dataCopyA1Params.dstNzNStride = 1; dataCopyA1Params.dstNzMatrixStride = 0; AscendC::DataCopy(a1Local, aGM, dataCopyA1Params); AscendC::Nd2NzParams dataCopyB1Params; dataCopyB1Params.ndNum = 1; dataCopyB1Params.nValue = k; dataCopyB1Params.dValue = n; dataCopyB1Params.srcNdMatrixStride = 0; dataCopyB1Params.srcDValue = n; dataCopyB1Params.dstNzC0Stride = k; dataCopyB1Params.dstNzNStride = 1; dataCopyB1Params.dstNzMatrixStride = 0; AscendC::DataCopy(b1Local, bGM, dataCopyB1Params); inQueueA1.EnQue(a1Local); inQueueB1.EnQue(b1Local); } __aicore__ inline void SplitA() { AscendC::LocalTensor<fmap_T> a1Local = inQueueA1.DeQue<fmap_T>(); AscendC::LocalTensor<fmap_T> a2Local = inQueueA2.AllocTensor<fmap_T>(); AscendC::LoadData2DParams loadL0AParams; loadL0AParams.repeatTimes = aSize * sizeof(fmap_T) / 512; loadL0AParams.srcStride = 1; loadL0AParams.ifTranspose = false; AscendC::LoadData(a2Local, a1Local, loadL0AParams); inQueueA2.EnQue<fmap_T>(a2Local); inQueueA1.FreeTensor(a1Local); } __aicore__ inline void SplitB() { AscendC::LocalTensor<weight_T> b1Local = inQueueB1.DeQue<weight_T>(); AscendC::LocalTensor<weight_T> b2Local = inQueueB2.AllocTensor<weight_T>(); AscendC::LoadData2dTransposeParams loadDataParams; loadDataParams.startIndex = 0; nBlockSize = 16; loadDataParams.repeatTimes = n / nBlockSize; loadDataParams.srcStride = 1; loadDataParams.dstGap = 0; loadDataParams.dstFracGap = n / nBlockSize - 1; AscendC::LoadDataWithTranspose(b2Local, b1Local, loadDataParams); inQueueB1.FreeTensor(b1Local); inQueueB2.EnQue<weight_T>(b2Local); } __aicore__ inline void Compute() { AscendC::LocalTensor<fmap_T> a2Local = inQueueA2.DeQue<fmap_T>(); AscendC::LocalTensor<weight_T> b2Local = inQueueB2.DeQue<weight_T>(); AscendC::LocalTensor<dstCO1_T> c1Local = outQueueCO1.AllocTensor<dstCO1_T>(); AscendC::MmadParams mmadParams; mmadParams.m = m; mmadParams.n = n; mmadParams.k = k; AscendC::Mmad(c1Local, a2Local, b2Local, mmadParams); outQueueCO1.EnQue<dstCO1_T>(c1Local); inQueueA2.FreeTensor(a2Local); inQueueB2.FreeTensor(b2Local); } __aicore__ inline void CopyOut() { AscendC::LocalTensor<dstCO1_T> c1Local = outQueueCO1.DeQue<dstCO1_T>(); AscendC::FixpipeParamsV220 fixpipeParams; fixpipeParams.nSize = n; fixpipeParams.mSize = m; fixpipeParams.srcStride = m; fixpipeParams.dstStride = n; fixpipeParams.ndNum = 1; fixpipeParams.srcNdStride = 0; fixpipeParams.dstNdStride = 0; AscendC::Fixpipe(cGM, c1Local, fixpipeParams); outQueueCO1.FreeTensor(c1Local); } private: AscendC::TPipe pipe; AscendC::TQue<AscendC::QuePosition::A1, 1> inQueueA1; AscendC::TQue<AscendC::QuePosition::A2, 1> inQueueA2; AscendC::TQue<AscendC::QuePosition::B1, 1> inQueueB1; AscendC::TQue<AscendC::QuePosition::B2, 1> inQueueB2; // dst queue AscendC::TQue<AscendC::QuePosition::CO1, 1> outQueueCO1; AscendC::GlobalTensor<fmap_T> aGM; AscendC::GlobalTensor<weight_T> bGM; AscendC::GlobalTensor<dst_T> cGM; uint16_t m = 16, k = 16, n = 32; uint8_t nBlockSize = 16; uint16_t c0Size = 16; uint16_t aSize, bSize, cSize, nBlocks; }; extern "C" __global__ __aicore__ void cube_matmul_loaddata_operator_float(__gm__ uint8_t *a, __gm__ uint8_t *b, __gm__ uint8_t *c) { KernelMatmul<dst_type, fmap_type, weight_type, dstCO1_type> op; op.Init(a, b, c); op.Process(); }