This operator is supported on Atlas Training Series products.

This operator is supported on Atlas A2 Training Series products.

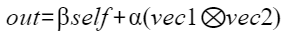

Function prototypes

- aclnnAddr and aclnnInplaceAddr perform the same computation and differ only in where the result is stored. Choose the one that fits your scenario:

- aclnnAddr: requires a separate output tensor object to hold the result.

- aclnnInplaceAddr: requires no output tensor object. The result is written directly into the memory of the input tensor.

- Each operator exposes a two-stage interface. You must first call "aclnnXxxGetWorkspaceSize", which takes the input parameters and computes the workspace size required by the computation flow, and then call "aclnnXxx" to perform the computation.

- The two-stage interface of aclnnAddr:

- First stage: aclnnStatus aclnnAddrGetWorkspaceSize(const aclTensor* self, const aclTensor* vec1, const aclTensor* vec2, const aclScalar* betaOptional, aclScalar* alphaOptional, aclTensor *out, uint64_t* workspaceSize, aclOpExecutor** executor)

- Second stage: aclnnStatus aclnnAddr(void *workspace, uint64_t workspaceSize, aclOpExecutor *executor, const aclrtStream stream)

- The two-stage interface of aclnnInplaceAddr:

- First stage: aclnnStatus aclnnInplaceAddrGetWorkspaceSize(aclTensor* selfRef, const aclTensor* vec1, const aclTensor* vec2, const aclScalar* betaOptional, aclScalar* alphaOptional, uint64_t* workspaceSize, aclOpExecutor** executor)

- Second stage: aclnnStatus aclnnInplaceAddr(void *workspace, uint64_t workspaceSize, aclOpExecutor *executor, const aclrtStream stream)
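To make the difference between the two variants concrete, here is a minimal host-side C++ sketch. It is not the CANN implementation. It assumes the standard addr definition out = beta * self + alpha * (vec1 ⊗ vec2), that is, out[i][j] = beta * self[i][j] + alpha * vec1[i] * vec2[j] (as in PyTorch's torch.addr), and that self is already a dense row-major matrix of shape [vec1 length, vec2 length], so no broadcasting is modeled. The function names AddrOutOfPlace and AddrInPlace are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Out-of-place variant (models aclnnAddr): self is only read and the result
// is returned in a new buffer. Layout: row-major, n = vec1.size() rows,
// m = vec2.size() columns.
std::vector<float> AddrOutOfPlace(const std::vector<float>& self,
                                  const std::vector<float>& vec1,
                                  const std::vector<float>& vec2,
                                  float beta, float alpha) {
  const std::size_t n = vec1.size();
  const std::size_t m = vec2.size();
  assert(self.size() == n * m);  // this sketch does not broadcast self
  std::vector<float> out(n * m);
  for (std::size_t i = 0; i < n; ++i) {
    for (std::size_t j = 0; j < m; ++j) {
      out[i * m + j] = beta * self[i * m + j] + alpha * vec1[i] * vec2[j];
    }
  }
  return out;
}

// In-place variant (models aclnnInplaceAddr): the result overwrites selfRef.
void AddrInPlace(std::vector<float>& selfRef,
                 const std::vector<float>& vec1,
                 const std::vector<float>& vec2,
                 float beta, float alpha) {
  const std::size_t n = vec1.size();
  const std::size_t m = vec2.size();
  assert(selfRef.size() == n * m);  // this sketch does not broadcast selfRef
  for (std::size_t i = 0; i < n; ++i) {
    for (std::size_t j = 0; j < m; ++j) {
      float& x = selfRef[i * m + j];
      x = beta * x + alpha * vec1[i] * vec2[j];
    }
  }
}
```

AddrOutOfPlace leaves self untouched and returns a new buffer, as aclnnAddr writes into a separate out tensor; AddrInPlace overwrites its first argument, as aclnnInplaceAddr writes into selfRef.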

aclnnAddrGetWorkspaceSize

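- Definition:

aclnnStatus aclnnAddrGetWorkspaceSize(const aclTensor* self, const aclTensor* vec1, const aclTensor* vec2, const aclScalar* betaOptional, aclScalar* alphaOptional, aclTensor *out, uint64_t* workspaceSize, aclOpExecutor** executor)

- Parameters:

- self: the matrix added to the outer product (scaled by betaOptional). A Device-side aclTensor. Supported data types: FLOAT, FLOAT16, DOUBLE, INT8, INT16, INT32, INT64, UINT8, BOOL. Its shape must be broadcast-compatible with vec1 and vec2. Non-contiguous tensors are supported. Data format: ND.

- vec1: the first vector of the outer product. A Device-side aclTensor. Supported data types: FLOAT, FLOAT16, DOUBLE, INT8, INT16, INT32, INT64, UINT8, BOOL. Its shape must be broadcast-compatible with self. Non-contiguous tensors are supported. Data format: ND.

- vec2: the second vector of the outer product. A Device-side aclTensor. Supported data types: FLOAT, FLOAT16, DOUBLE, INT8, INT16, INT32, INT64, UINT8, BOOL. Its shape must be broadcast-compatible with self. Non-contiguous tensors are supported. Data format: ND.

- betaOptional: the scaling factor for self. A Host-side aclScalar. Supported data types: FLOAT, FLOAT16, DOUBLE, INT8, INT16, INT32, INT64, UINT8, BOOL. If betaOptional is BOOL, the promoted (highest) type of self, vec1, and vec2 must be BOOL. Data format: ND.

- alphaOptional: the scaling factor for the outer product. A Host-side aclScalar. Supported data types: FLOAT, FLOAT16, DOUBLE, INT8, INT16, INT32, INT64, UINT8, BOOL. If alphaOptional is BOOL, the promoted (highest) type of self, vec1, and vec2 must be BOOL. Data format: ND.

- out: the output. A Device-side aclTensor. Supported data types: FLOAT, FLOAT16, DOUBLE, INT8, INT16, INT32, INT64, UINT8, BOOL. Non-contiguous tensors are supported. Data format: ND.

- workspaceSize: returns the size of the workspace that the caller must allocate on the Device.

- executor: returns the op executor, which contains the operator's computation flow.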

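- Return value:

Returns an aclnnStatus status code. For details, see the aclnn return codes.

The first-stage interface validates the input parameters and returns an error in the following cases:

- 161001 (ACLNN_ERR_PARAM_NULLPTR): self, vec1, vec2, or out is a null pointer.

- 161002 (ACLNN_ERR_PARAM_INVALID):

- The data type or data format of self, vec1, or vec2 is not supported.

- vec1 or vec2 is not 1-dimensional, or self has more than 2 dimensions.

- self cannot be broadcast to the shape of the outer product of vec1 and vec2.

- betaOptional or alphaOptional is BOOL, but the promoted type of self, vec1, and vec2 is not BOOL.

- self, vec1, and vec2 are all integer types, but betaOptional or alphaOptional is a floating-point type.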
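The shape checks behind the 161002 errors above can be sketched as a host-side predicate. This is a hypothetical helper, not part of the aclnn API. It assumes NumPy-style broadcasting (trailing dimensions aligned, each source dimension equal to the target or 1), which this page does not spell out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: true if shape `from` can be broadcast to shape `to`
// (align trailing dimensions; each source dimension must equal the target or be 1).
bool CanBroadcastTo(const std::vector<int64_t>& from, const std::vector<int64_t>& to) {
  if (from.size() > to.size()) {
    return false;
  }
  const std::size_t offset = to.size() - from.size();
  for (std::size_t k = 0; k < from.size(); ++k) {
    if (from[k] != 1 && from[k] != to[offset + k]) {
      return false;
    }
  }
  return true;
}

// Hypothetical mirror of the documented shape rules for self, vec1, vec2.
bool AddrShapesValid(const std::vector<int64_t>& selfShape,
                     const std::vector<int64_t>& vec1Shape,
                     const std::vector<int64_t>& vec2Shape) {
  if (vec1Shape.size() != 1 || vec2Shape.size() != 1) {
    return false;  // vec1 and vec2 must be 1-D
  }
  if (selfShape.size() > 2) {
    return false;  // self may have at most 2 dimensions
  }
  // self must broadcast to the outer-product shape [len(vec1), len(vec2)]
  const std::vector<int64_t> outerShape = {vec1Shape[0], vec2Shape[0]};
  return CanBroadcastTo(selfShape, outerShape);
}
```

For example, with vec1 of length 3 and vec2 of length 2, self shapes [3, 2], [2], and [3, 1] pass, while [2, 3] fails.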

aclnnAddr

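- Definition:

aclnnStatus aclnnAddr(void *workspace, uint64_t workspaceSize, aclOpExecutor *executor, const aclrtStream stream)

- Parameters:

- workspace: start address of the workspace memory allocated on the Device.

- workspaceSize: size of the workspace allocated on the Device, as returned by the first-stage interface aclnnAddrGetWorkspaceSize.

- executor: the op executor, which contains the operator's computation flow.

- stream: the AscendCL stream on which the task is executed.

- Return value:

Returns an aclnnStatus status code. For details, see the aclnn return codes.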
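The two-stage contract (query the workspace size, allocate it only when it is non-zero, then execute with the executor from the first stage) can be modeled on the host as below. Everything here is a hypothetical mock: MockGetWorkspaceSize, MockRun, and MockExecutor stand in for aclnnAddrGetWorkspaceSize, aclnnAddr, and aclOpExecutor, std::malloc stands in for aclrtMalloc, and the workspace size formula and error values are arbitrary.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>

// Hypothetical stand-in for aclOpExecutor: remembers what stage 1 computed.
struct MockExecutor {
  uint64_t requiredWorkspace;
};

// Stage 1 (stands in for aclnnAddrGetWorkspaceSize): check the output
// pointers and report how much workspace stage 2 needs.
int MockGetWorkspaceSize(uint64_t elements, uint64_t* workspaceSize, MockExecutor** executor) {
  if (workspaceSize == nullptr || executor == nullptr) {
    return -1;  // arbitrary mock error value
  }
  *workspaceSize = elements * sizeof(float);  // arbitrary illustrative size
  *executor = new MockExecutor{*workspaceSize};
  return 0;
}

// Stage 2 (stands in for aclnnAddr): verify that the caller supplied the
// workspace requested in stage 1, then release the executor.
int MockRun(void* workspace, uint64_t workspaceSize, MockExecutor* executor) {
  if (executor == nullptr) {
    return -1;
  }
  const bool ok = workspaceSize >= executor->requiredWorkspace &&
                  (executor->requiredWorkspace == 0 || workspace != nullptr);
  delete executor;  // in this mock the executor is consumed by the run
  return ok ? 0 : -2;
}

// Caller side, mirroring the calling example: query, allocate only when the
// size is non-zero, execute, then free the workspace.
int RunTwoStage(uint64_t elements) {
  uint64_t workspaceSize = 0;
  MockExecutor* executor = nullptr;
  int ret = MockGetWorkspaceSize(elements, &workspaceSize, &executor);
  if (ret != 0) {
    return ret;
  }
  void* workspace = nullptr;
  if (workspaceSize > 0) {
    workspace = std::malloc(workspaceSize);  // aclrtMalloc on the Device in real code
  }
  ret = MockRun(workspace, workspaceSize, executor);
  std::free(workspace);
  return ret;
}
```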

aclnnInplaceAddrGetWorkspaceSize

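- Definition:

aclnnStatus aclnnInplaceAddrGetWorkspaceSize(aclTensor* selfRef, const aclTensor* vec1, const aclTensor* vec2, const aclScalar* betaOptional, aclScalar* alphaOptional, uint64_t* workspaceSize, aclOpExecutor** executor)

- Parameters:

- selfRef: the matrix added to the outer product; the result is also written back into it. A Device-side aclTensor. Supported data types: FLOAT, FLOAT16, DOUBLE, INT8, INT16, INT32, INT64, UINT8, BOOL. Non-contiguous tensors are supported. Data format: ND.

- vec1: the first vector of the outer product. A Device-side aclTensor. Supported data types: FLOAT, FLOAT16, DOUBLE, INT8, INT16, INT32, INT64, UINT8, BOOL. Non-contiguous tensors are supported. Data format: ND.

- vec2: the second vector of the outer product. A Device-side aclTensor. Supported data types: FLOAT, FLOAT16, DOUBLE, INT8, INT16, INT32, INT64, UINT8, BOOL. Non-contiguous tensors are supported. Data format: ND.

- betaOptional: the scaling factor for selfRef. A Host-side aclScalar. Supported data types: FLOAT, FLOAT16, DOUBLE, INT8, INT16, INT32, INT64, UINT8, BOOL. If betaOptional is BOOL, the promoted (highest) type of selfRef, vec1, and vec2 must be BOOL. Data format: ND.

- alphaOptional: the scaling factor for the outer product. A Host-side aclScalar. Supported data types: FLOAT, FLOAT16, DOUBLE, INT8, INT16, INT32, INT64, UINT8, BOOL. If alphaOptional is BOOL, the promoted (highest) type of selfRef, vec1, and vec2 must be BOOL. Data format: ND.

- workspaceSize: returns the size of the workspace that the caller must allocate on the Device.

- executor: returns the op executor, which contains the operator's computation flow.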

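- Return value:

Returns an aclnnStatus status code. For details, see the aclnn return codes.

The first-stage interface validates the input parameters and returns an error in the following cases:

- 161001 (ACLNN_ERR_PARAM_NULLPTR): selfRef, vec1, or vec2 is a null pointer.

- 161002 (ACLNN_ERR_PARAM_INVALID):

- The data type or data format of selfRef, vec1, or vec2 is not supported.

- vec1 or vec2 is not 1-dimensional, or selfRef has more than 2 dimensions.

- selfRef cannot be broadcast to the shape of the outer product of vec1 and vec2.

- betaOptional or alphaOptional is BOOL, but the promoted type of selfRef, vec1, and vec2 is not BOOL.

- selfRef, vec1, and vec2 are all integer types, but betaOptional or alphaOptional is a floating-point type.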
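The two scalar-type rules above (also listed for aclnnAddrGetWorkspaceSize) can be written as a small predicate. This is a hypothetical sketch: the DType enum is not an aclnn type, and it assumes that BOOL counts as an integer type for the second rule, as it does in PyTorch's torch.addr; this page does not state that explicitly.

```cpp
#include <cassert>
#include <initializer_list>

// Hypothetical dtype enum covering the types listed on this page.
enum class DType { Float, Float16, Double, Int8, Int16, Int32, Int64, UInt8, Bool };

bool IsFloating(DType t) {
  return t == DType::Float || t == DType::Float16 || t == DType::Double;
}

// Checks the scalar-type rules for the beta and alpha scalars.
// Rule 1: a BOOL scalar requires the promoted tensor type to be BOOL,
//         which here means all three tensors are BOOL.
// Rule 2: if all three tensors are integer types (BOOL included, by
//         assumption), neither scalar may be floating-point.
bool AddrScalarTypesValid(DType self, DType vec1, DType vec2, DType beta, DType alpha) {
  bool allBool = true;
  bool allIntegral = true;
  for (DType t : {self, vec1, vec2}) {
    allBool = allBool && t == DType::Bool;
    allIntegral = allIntegral && !IsFloating(t);
  }
  for (DType s : {beta, alpha}) {
    if (s == DType::Bool && !allBool) {
      return false;  // violates rule 1
    }
    if (IsFloating(s) && allIntegral) {
      return false;  // violates rule 2
    }
  }
  return true;
}
```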

aclnnInplaceAddr

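- Definition:

aclnnStatus aclnnInplaceAddr(void *workspace, uint64_t workspaceSize, aclOpExecutor *executor, const aclrtStream stream)

- Parameters:

- workspace: start address of the workspace memory allocated on the Device.

- workspaceSize: size of the workspace allocated on the Device, as returned by the first-stage interface aclnnInplaceAddrGetWorkspaceSize.

- executor: the op executor, which contains the operator's computation flow.

- stream: the AscendCL stream on which the task is executed.

- Return value:

Returns an aclnnStatus status code. For details, see the aclnn return codes.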

Calling example


```cpp
#include <cstdint>
#include <cstdio>
#include <iostream>
#include <vector>
#include "acl/acl.h"
#include "aclnnop/aclnn_addr.h"

#define CHECK_RET(cond, return_expr) \
  do {                               \
    if (!(cond)) {                   \
      return_expr;                   \
    }                                \
  } while (0)

#define LOG_PRINT(message, ...)     \
  do {                              \
    printf(message, ##__VA_ARGS__); \
  } while (0)

int64_t GetShapeSize(const std::vector<int64_t>& shape) {
  int64_t shape_size = 1;
  for (auto i : shape) {
    shape_size *= i;
  }
  return shape_size;
}

void PrintOutResult(const std::vector<int64_t>& shape, void** deviceAddr) {
  auto size = GetShapeSize(shape);
  std::vector<float> resultData(size, 0);
  auto ret = aclrtMemcpy(resultData.data(), resultData.size() * sizeof(resultData[0]), *deviceAddr,
                         size * sizeof(float), ACL_MEMCPY_DEVICE_TO_HOST);
  CHECK_RET(ret == ACL_SUCCESS, LOG_PRINT("copy result from device to host failed. ERROR: %d\n", ret); return);
  for (int64_t i = 0; i < size; i++) {
    LOG_PRINT("result[%ld] is: %f\n", i, resultData[i]);
  }
}

int Init(int32_t deviceId, aclrtContext* context, aclrtStream* stream) {
  // Boilerplate: initialize AscendCL
  auto ret = aclInit(nullptr);
  CHECK_RET(ret == ACL_SUCCESS, LOG_PRINT("aclInit failed. ERROR: %d\n", ret); return ret);
  ret = aclrtSetDevice(deviceId);
  CHECK_RET(ret == ACL_SUCCESS, LOG_PRINT("aclrtSetDevice failed. ERROR: %d\n", ret); return ret);
  ret = aclrtCreateContext(context, deviceId);
  CHECK_RET(ret == ACL_SUCCESS, LOG_PRINT("aclrtCreateContext failed. ERROR: %d\n", ret); return ret);
  ret = aclrtSetCurrentContext(*context);
  CHECK_RET(ret == ACL_SUCCESS, LOG_PRINT("aclrtSetCurrentContext failed. ERROR: %d\n", ret); return ret);
  ret = aclrtCreateStream(stream);
  CHECK_RET(ret == ACL_SUCCESS, LOG_PRINT("aclrtCreateStream failed. ERROR: %d\n", ret); return ret);
  return 0;
}

template <typename T>
int CreateAclTensor(const std::vector<T>& hostData, const std::vector<int64_t>& shape, void** deviceAddr,
                    aclDataType dataType, aclTensor** tensor) {
  auto size = GetShapeSize(shape) * sizeof(T);
  // Allocate Device memory with aclrtMalloc
  auto ret = aclrtMalloc(deviceAddr, size, ACL_MEM_MALLOC_HUGE_FIRST);
  CHECK_RET(ret == ACL_SUCCESS, LOG_PRINT("aclrtMalloc failed. ERROR: %d\n", ret); return ret);
  // Copy the Host data to Device memory with aclrtMemcpy
  ret = aclrtMemcpy(*deviceAddr, size, hostData.data(), size, ACL_MEMCPY_HOST_TO_DEVICE);
  CHECK_RET(ret == ACL_SUCCESS, LOG_PRINT("aclrtMemcpy failed. ERROR: %d\n", ret); return ret);
  // Compute the strides of a contiguous tensor
  std::vector<int64_t> strides(shape.size(), 1);
  for (int64_t i = static_cast<int64_t>(shape.size()) - 2; i >= 0; i--) {
    strides[i] = shape[i + 1] * strides[i + 1];
  }
  // Create the aclTensor with aclCreateTensor
  *tensor = aclCreateTensor(shape.data(), shape.size(), dataType, strides.data(), 0, aclFormat::ACL_FORMAT_ND,
                            shape.data(), shape.size(), *deviceAddr);
  CHECK_RET(*tensor != nullptr, LOG_PRINT("aclCreateTensor failed.\n"); return -1);
  return 0;
}

int main() {
  // 1. (Boilerplate) Initialize device/context/stream; see the AscendCL API list.
  //    Set deviceId to match your actual device.
  int32_t deviceId = 0;
  aclrtContext context;
  aclrtStream stream;
  auto ret = Init(deviceId, &context, &stream);
  // Handle the check as your application requires
  CHECK_RET(ret == 0, LOG_PRINT("Init acl failed. ERROR: %d\n", ret); return ret);

  // 2. Construct the inputs and output; adapt them to the API's interface
  std::vector<int64_t> inputShape = {3, 2};
  std::vector<int64_t> vec1Shape = {3};
  std::vector<int64_t> vec2Shape = {2};
  std::vector<int64_t> outShape = {3, 2};
  void* inputDeviceAddr = nullptr;
  void* vec1DeviceAddr = nullptr;
  void* vec2DeviceAddr = nullptr;
  void* outDeviceAddr = nullptr;
  aclTensor* input = nullptr;
  aclTensor* vec1 = nullptr;
  aclTensor* vec2 = nullptr;
  aclTensor* out = nullptr;
  aclScalar* beta = nullptr;
  aclScalar* alpha = nullptr;
  std::vector<float> inputHostData = {0, 1, 2, 3, 4, 5};
  std::vector<float> vec1HostData = {1, 2, 3};
  std::vector<float> vec2HostData = {4, 5};
  std::vector<float> outHostData = {0, 0, 0, 0, 0, 0};
  float betaValue = 1.5f;
  float alphaValue = 1.5f;
  ret = CreateAclTensor(inputHostData, inputShape, &inputDeviceAddr, aclDataType::ACL_FLOAT, &input);
  CHECK_RET(ret == ACL_SUCCESS, return ret);
  ret = CreateAclTensor(vec1HostData, vec1Shape, &vec1DeviceAddr, aclDataType::ACL_FLOAT, &vec1);
  CHECK_RET(ret == ACL_SUCCESS, return ret);
  ret = CreateAclTensor(vec2HostData, vec2Shape, &vec2DeviceAddr, aclDataType::ACL_FLOAT, &vec2);
  CHECK_RET(ret == ACL_SUCCESS, return ret);
  ret = CreateAclTensor(outHostData, outShape, &outDeviceAddr, aclDataType::ACL_FLOAT, &out);
  CHECK_RET(ret == ACL_SUCCESS, return ret);
  // Create the beta and alpha scalars
  beta = aclCreateScalar(&betaValue, aclDataType::ACL_FLOAT);
  CHECK_RET(beta != nullptr, LOG_PRINT("aclCreateScalar(beta) failed.\n"); return -1);
  alpha = aclCreateScalar(&alphaValue, aclDataType::ACL_FLOAT);
  CHECK_RET(alpha != nullptr, LOG_PRINT("aclCreateScalar(alpha) failed.\n"); return -1);

  // 3. Call the CANN operator library API; replace with the specific operator's interface
  uint64_t workspaceSize = 0;
  aclOpExecutor* executor = nullptr;
  // Call the first-stage interface of aclnnAddr
  ret = aclnnAddrGetWorkspaceSize(input, vec1, vec2, beta, alpha, out, &workspaceSize, &executor);
  CHECK_RET(ret == ACL_SUCCESS, LOG_PRINT("aclnnAddrGetWorkspaceSize failed. ERROR: %d\n", ret); return ret);
  // Allocate Device memory for the workspaceSize computed by the first stage
  void* workspaceAddr = nullptr;
  if (workspaceSize > 0) {
    ret = aclrtMalloc(&workspaceAddr, workspaceSize, ACL_MEM_MALLOC_HUGE_FIRST);
    CHECK_RET(ret == ACL_SUCCESS, LOG_PRINT("allocate workspace failed. ERROR: %d\n", ret); return ret);
  }
  // Call the second-stage interface of aclnnAddr
  ret = aclnnAddr(workspaceAddr, workspaceSize, executor, stream);
  CHECK_RET(ret == ACL_SUCCESS, LOG_PRINT("aclnnAddr failed. ERROR: %d\n", ret); return ret);

  // 4. (Boilerplate) Wait synchronously for the task to finish
  ret = aclrtSynchronizeStream(stream);
  CHECK_RET(ret == ACL_SUCCESS, LOG_PRINT("aclrtSynchronizeStream failed. ERROR: %d\n", ret); return ret);

  // 5. Copy the result from Device memory to the Host and print it; adapt to the API's definition
  PrintOutResult(outShape, &outDeviceAddr);

  // 6. Release the aclTensor and aclScalar objects; adapt to the API's definition
  aclDestroyTensor(input);
  aclDestroyTensor(vec1);
  aclDestroyTensor(vec2);
  aclDestroyTensor(out);
  aclDestroyScalar(beta);
  aclDestroyScalar(alpha);

  // 7. Release Device memory and AscendCL resources
  aclrtFree(inputDeviceAddr);
  aclrtFree(vec1DeviceAddr);
  aclrtFree(vec2DeviceAddr);
  aclrtFree(outDeviceAddr);
  if (workspaceSize > 0) {
    aclrtFree(workspaceAddr);
  }
  aclrtDestroyStream(stream);
  aclrtDestroyContext(context);
  aclrtResetDevice(deviceId);
  aclFinalize();
  return 0;
}
```
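As a sanity check on the printed values, assume aclnnAddr computes out = β·self + α·(vec1 ⊗ vec2) (the standard addr definition, as in PyTorch's torch.addr). With the example's inputs (self reshaped row-major to 3×2, β = α = 1.5), the expected result is:

$$
\text{out} = 1.5\begin{pmatrix}0&1\\2&3\\4&5\end{pmatrix} + 1.5\begin{pmatrix}1\\2\\3\end{pmatrix}\begin{pmatrix}4&5\end{pmatrix}
= 1.5\begin{pmatrix}0&1\\2&3\\4&5\end{pmatrix} + 1.5\begin{pmatrix}4&5\\8&10\\12&15\end{pmatrix}
= \begin{pmatrix}6&9\\15&19.5\\24&30\end{pmatrix}
$$

so result[0] through result[5] should read 6, 9, 15, 19.5, 24, and 30.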