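This section uses an Atlas 800 inference server (model 3010) with an Atlas 300I Pro inference card running openEuler 22.03 LTS as an example to describe how to pass an NPU through to a virtual machine (VM). The figures and command output shown in the procedure are examples only; the actual interface prevails.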

Prerequisites

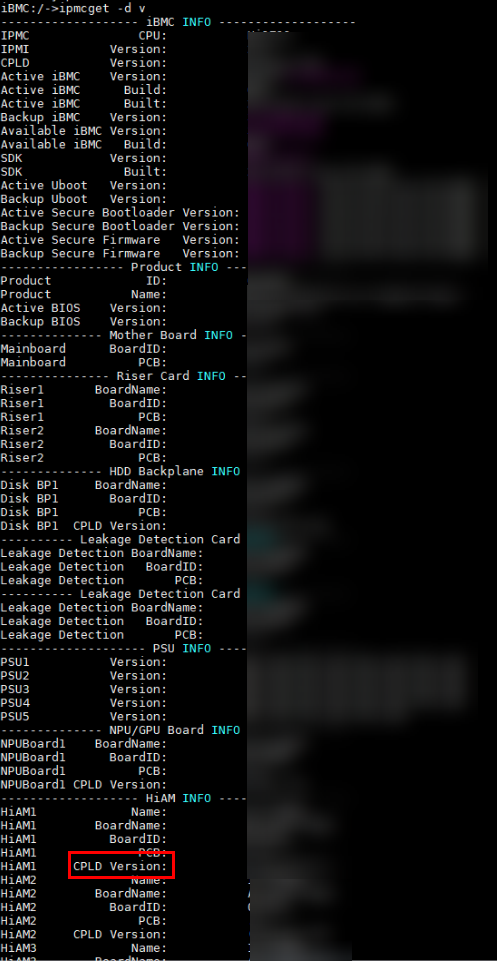

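- For the Atlas 900 A2 PoD cluster basic unit, Atlas 800T A2 training server, and Atlas 800I A2 inference server, the NPU module CPLD version must be 2.09 or later.

- For the Atlas 200T A2 Box16 heterogeneous subrack, the NPU module CPLD version must be 2.04 or later.

To query the NPU module CPLD version, log in to the iBMC CLI, run ipmcget -d v, and check the "CPLD Version" field in the output, as shown in the screenshot.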
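The version check above can be sketched in Python as follows. This is only an illustration: the short product labels used as dictionary keys are hypothetical, and the code assumes that the "CPLD Version" field is a dotted numeric string such as 2.09 that can be compared part by part.

```python
# Minimum NPU module CPLD versions, taken from the prerequisites above.
# The short product labels are hypothetical keys chosen for this sketch.
MIN_CPLD_VERSION = {
    "Atlas 900 A2 PoD": "2.09",
    "Atlas 800T A2": "2.09",
    "Atlas 800I A2": "2.09",
    "Atlas 200T A2 Box16": "2.04",
}


def parse_version(text):
    """Turn a dotted version such as '2.09' into (2, 9) for numeric comparison."""
    return tuple(int(part) for part in text.strip().split("."))


def cpld_version_ok(product, reported):
    """Return True if the reported CPLD version meets the minimum for the product."""
    return parse_version(reported) >= parse_version(MIN_CPLD_VERSION[product])


if __name__ == "__main__":
    # The reported value would be read manually from the "CPLD Version" field.
    print(cpld_version_ok("Atlas 800T A2", "2.09"))
```

Comparing integer tuples rather than raw strings avoids mistakes when the two versions have a different number of digits.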

Procedure

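- For the Atlas 900 A2 PoD cluster basic unit, Atlas 800T A2 training server, Atlas 800I A2 inference server (HCCS model), and Atlas 200T A2 Box16 heterogeneous subrack, the specified NPU chips must first be isolated so that the server starts with a reduced number of NPUs (reduced-P startup).

- Log in to the iBMC CLI of the device as the Administrator user.

- Run the power-off command for the server. A confirmation prompt is displayed:

WARNING: A forced power-off may damage programs or unsaved data of the server. Do you want to continue?[Y/N]:

Enter "Y":

Control fru0 forced power off successfully.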

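- Query the isolation status of the NPU chips. Output similar to the following is displayed:

    NPU1 Status: Enabled
    NPU2 Status: Enabled
    NPU3 Status: Enabled
    NPU4 Status: Enabled
    NPU5 Status: Enabled
    NPU6 Status: Enabled
    NPU7 Status: Enabled
    NPU8 Status: Enabled

- If the status is "Enabled", the NPU chip is not isolated. Continue with the following operations to isolate the specified NPU chip for reduced-P startup.

- If the status is "Isolation", the NPU chip is already isolated and the following operations can be skipped.

- For the Atlas 900 A2 PoD cluster basic unit, Atlas 800T A2 training server, and Atlas 800I A2 inference server (HCCS model), NPU1 to NPU8 reported by the iBMC correspond to NPU0 to NPU7 reported by npu-smi info on the physical machine.

- For the Atlas 200T A2 Box16 heterogeneous subrack, NPU1 to NPU16 reported by the iBMC correspond to NPU0 to NPU15 reported by npu-smi info on the physical machine.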
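The status output and the numbering offset described above can be handled with a small helper. The sketch below assumes that the iBMC output consists of entries shaped like "NPU3 Status: Isolation", as in the example; the function names are hypothetical.

```python
import re


def parse_isolation_status(output):
    """Map the iBMC NPU number to its status, e.g. {3: 'Isolation'}."""
    pairs = re.findall(r"NPU(\d+)\s+Status:\s*(\w+)", output)
    return {int(number): status for number, status in pairs}


def host_npu_id(ibmc_npu_number):
    """The iBMC counts NPUs from 1, npu-smi info on the host counts from 0."""
    return ibmc_npu_number - 1


def enabled_npus(output):
    """Return the iBMC numbers of NPUs that are not isolated yet."""
    status = parse_isolation_status(output)
    return sorted(number for number, state in status.items() if state == "Enabled")


if __name__ == "__main__":
    sample = "NPU1 Status: Enabled\nNPU2 Status: Enabled\nNPU3 Status: Isolation"
    print(parse_isolation_status(sample))
    print(enabled_npus(sample))
    print(host_npu_id(3))
```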

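- Run the following command to change the isolation status of the specified NPU chip.

ipmcset -d npuisolation -v 3 1

This example uses NPU ID 3. In the command, "1" isolates the NPU chip (reduced-P startup) and "0" starts the NPU chip normally.

To change the isolation status of several NPU chips, repeat this step for each chip.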
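When several chips have to be isolated, the per-chip commands can be generated rather than typed by hand. The sketch below only builds command strings in the form shown above (ipmcset -d npuisolation -v <NPU ID> <0|1>); it does not execute anything, and the helper name is hypothetical.

```python
def isolation_commands(npu_ids, isolate=True):
    """Build one ipmcset command per NPU ID (iBMC numbering, starting at 1)."""
    flag = 1 if isolate else 0
    commands = []
    for npu_id in npu_ids:
        if npu_id < 1:
            raise ValueError("iBMC NPU IDs start at 1, got %r" % npu_id)
        commands.append("ipmcset -d npuisolation -v %d %d" % (npu_id, flag))
    return commands


if __name__ == "__main__":
    # Print the commands so they can be reviewed before being run on the iBMC.
    for line in isolation_commands([3, 5]):
        print(line)
```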

- Query the isolation status of the NPU chips again. Output similar to the following is displayed:

    NPU1 Status: Enabled
    NPU2 Status: Enabled
    NPU3 Status: Isolation
    NPU4 Status: Enabled
    NPU5 Status: Enabled
    NPU6 Status: Enabled
    NPU7 Status: Enabled
    NPU8 Status: Enabled

- Run the power-on command for the server. A confirmation prompt is displayed:

WARNING: The operation may have many adverse effects. Do you want to continue?[Y/N]:

Enter "Y":

Control fru0 power on successfully.

- Log in to the physical machine and run the following command to view the PCIe information of the NPU chips and identify the chip to be passed through. The following steps use 3b:00.0 as an example.

lspci | grep <device_name>

    lspci | grep d500
    3b:00.0 Processing accelerators: Huawei Technologies Co., Ltd. Device d500 (rev 23)
    3c:00.0 Processing accelerators: Huawei Technologies Co., Ltd. Device d500 (rev 23)
    5e:00.0 Processing accelerators: Huawei Technologies Co., Ltd. Device d500 (rev 23)
    86:00.0 Processing accelerators: Huawei Technologies Co., Ltd. Device d500 (rev 23)
    87:00.0 Processing accelerators: Huawei Technologies Co., Ltd. Device d500 (rev 23)
    af:00.0 Processing accelerators: Huawei Technologies Co., Ltd. Device d500 (rev 23)
    d8:00.0 Processing accelerators: Huawei Technologies Co., Ltd. Device d500 (rev 23)

The order of the BDF numbers returned by lspci | grep <device_name> does not necessarily match the order of the NPU IDs.

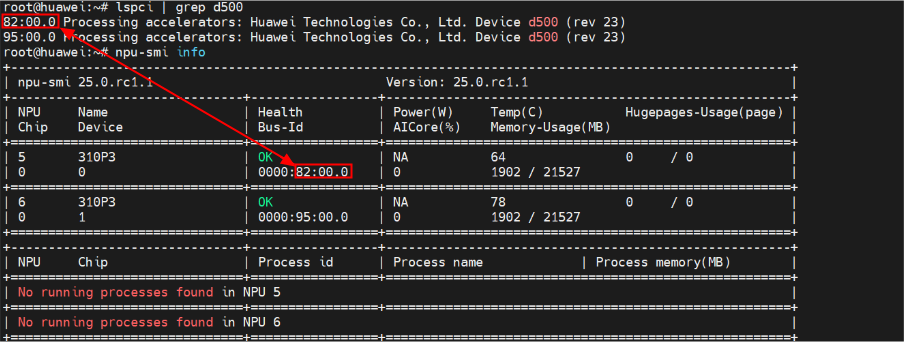

The BDF number returned by lspci | grep <device_name> corresponds to the last three segments of the Bus ID returned by npu-smi info, as shown in Figure 1.
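The relationship between the Bus ID and the BDF number can be expressed as a short conversion. The sketch below assumes that the Bus ID has the form domain:bus:device.function (for example 0000:3B:00.0), which is an assumption about the npu-smi info output format; the function name is hypothetical.

```python
def bus_id_to_bdf(bus_id):
    """Drop the PCI domain from a Bus ID and lower-case it, as lspci prints it."""
    parts = bus_id.strip().lower().split(":")
    if len(parts) != 3:
        raise ValueError("expected domain:bus:device.function, got %r" % bus_id)
    # parts[0] is the domain; bus and device.function form the BDF.
    return ":".join(parts[1:])


if __name__ == "__main__":
    print(bus_id_to_bdf("0000:3B:00.0"))
```

Matching on the converted value is more reliable than matching on position, because the BDF order and the NPU ID order may differ.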

- Run the following command to obtain the libvirt node device name of the NPU chip to be passed through (for example, pci_0000_3b_00_0).

virsh nodedev-list --tree | grep pci

    virsh nodedev-list --tree | grep pci
    ......
      +- pci_0000_3a_00_0
      |   +- pci_0000_3b_00_0
      +- pci_0000_3a_02_0
      |   +- pci_0000_3c_00_0
      +- pci_0000_3a_05_0
      +- pci_0000_3a_05_2
      +- pci_0000_3a_05_4
    ......
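The node device name follows directly from the BDF number, so it can be computed instead of searched for in the tree. The sketch below assumes PCI domain 0 by default, as in the example; the function name is hypothetical.

```python
import re


def bdf_to_nodedev(bdf, domain=0):
    """Convert a BDF such as '3b:00.0' to a name such as 'pci_0000_3b_00_0'."""
    match = re.fullmatch(r"([0-9a-fA-F]{2}):([0-9a-fA-F]{2})\.([0-7])", bdf.strip())
    if match is None:
        raise ValueError("expected bus:device.function, got %r" % bdf)
    bus, slot, function = match.groups()
    return "pci_%04x_%s_%s_%s" % (domain, bus.lower(), slot.lower(), function)


if __name__ == "__main__":
    print(bdf_to_nodedev("3b:00.0"))
```

The result can be checked against the output of virsh nodedev-list before it is used in later steps.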

- Run the following command to obtain the XML description of the NPU chip to be passed through, and note the values of its domain, bus, slot, and function fields.

virsh nodedev-dumpxml <device>

    virsh nodedev-dumpxml pci_0000_3b_00_0
    <device>
      <name>pci_0000_3b_00_0</name>
      <path>/sys/devices/pci0000:3a/0000:3a:00.0/0000:3b:00.0</path>
      <parent>pci_0000_3a_00_0</parent>
      <driver>
        <name>devdrv_device_driver</name>
      </driver>
      <capability type='pci'>
        <class>0x120000</class>
        <domain>0</domain>
        <bus>59</bus>
        <slot>0</slot>
        <function>0</function>
        <product id='0xd500'/>
        <vendor id='0x19e5'>Huawei Technologies Co., Ltd.</vendor>
        <iommuGroup number='39'>
          <address domain='0x0000' bus='0x3b' slot='0x00' function='0x0'/>
        </iommuGroup>
        <numa node='0'/>
        <pci-express>
          <link validity='cap' port='0' speed='16' width='16'/>
          <link validity='sta' speed='8' width='8'/>
        </pci-express>
      </capability>
    </device>

- Run the following command to list the VMs.

virsh list --all

     Id   Name        State
    ----------------------------
     1    openeuler   running

- Run the following command to shut down the target VM.

virsh shutdown <domain>

    virsh shutdown openeuler
    Domain openeuler is being shutdown

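- Modify the configuration of the target VM to pass the NPU chip through to it.

- Run the command that opens the configuration text of the target VM.

On Ubuntu, the following prompt may appear. Select an editor as prompted.

    Select an editor. To change later, run 'select-editor'.
      1. /bin/nano <---- easiest
      2. /usr/bin/vim.basic
      3. /usr/bin/vim.tiny
      4. /bin/ed
    Choose 1-4 [1]: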

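- In the devices section of the configuration text, add the information about the NPU chip to be passed through, in the following format:

    <hostdev mode='subsystem' type='pci' managed='yes'>
      <source>
        <address domain='0x0000' bus='0x3b' slot='0x00' function='0x0'/>
      </source>
    </hostdev>

- (Optional) Perform this step only for the Atlas 900 A3 SuperPoD. To configure huge page memory, add the following content to the domain section of the configuration text. Set the page size parameter according to the memory size actually configured in the /etc/default/grub configuration file (see "Debian10(veLinux 1.3) configuration...").

    <memoryBacking>
      <hugepages>
        <page size='16777216' unit='KiB'/>
      </hugepages>
    </memoryBacking>

Run the following commands to configure static huge pages. This example assumes a VM configured with 64 GB of memory and a huge page size of 16 GB; the value 4 is the VM memory size divided by the huge page size.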

echo 4 > /sys/devices/system/node/node0/hugepages/hugepages-16777216kB/nr_hugepages

echo 4 > /sys/devices/system/node/node1/hugepages/hugepages-16777216kB/nr_hugepages

echo 4 > /sys/devices/system/node/node2/hugepages/hugepages-16777216kB/nr_hugepages

echo 4 > /sys/devices/system/node/node3/hugepages/hugepages-16777216kB/nr_hugepages
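The arithmetic behind the value 4 and the sysfs path can be sketched as follows. The code treats the sizes as GiB (16 GiB equals 16777216 KiB, which matches the page size attribute above) and only computes values; writing them to sysfs is left to the echo commands. The function names are hypothetical.

```python
def page_size_kib(hugepage_gib):
    """Huge page size in KiB, as used in the page size attribute and sysfs path."""
    return hugepage_gib * 1024 * 1024


def hugepage_count(vm_memory_gib, hugepage_gib):
    """Number of huge pages: VM memory size divided by the huge page size."""
    if vm_memory_gib % hugepage_gib != 0:
        raise ValueError("VM memory must be a multiple of the huge page size")
    return vm_memory_gib // hugepage_gib


def nr_hugepages_path(node, hugepage_gib):
    """sysfs file that holds the static huge page count of one NUMA node."""
    return (
        "/sys/devices/system/node/node%d/hugepages/hugepages-%dkB/nr_hugepages"
        % (node, page_size_kib(hugepage_gib))
    )


if __name__ == "__main__":
    count = hugepage_count(64, 16)
    for node in range(4):
        print("echo %d > %s" % (count, nr_hugepages_path(node, 16)))
```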

- (Optional) Perform this step only for the A200T A3 Box8 SuperPoD server running Tlinux3.1. To configure NUMA for device passthrough, add the following content to the domain section of the configuration text. Use the sample below as a reference; for each device, set the domain, bus, slot, and function fields to the values queried in step 4.

    <controller type="sata" index="0">
      <alias name="ide"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x1f" function="0x2"/>
    </controller>
    <controller type="pci" index="0" model="pcie-root">
      <alias name="pcie.0"/>
    </controller>
    <controller type="pci" index="1" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="1" port="0x10"/>
      <alias name="pci.1"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x02" function="0x0" multifunction="on"/>
    </controller>
    <controller type="pci" index="2" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="2" port="0x11"/>
      <alias name="pci.2"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x02" function="0x1"/>
    </controller>
    <controller type="pci" index="3" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="3" port="0x12"/>
      <alias name="pci.3"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x02" function="0x2"/>
    </controller>
    <controller type="pci" index="4" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="4" port="0x13"/>
      <alias name="pci.4"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x02" function="0x3"/>
    </controller>
    <controller type="pci" index="5" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="5" port="0x14"/>
      <alias name="pci.5"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x02" function="0x4"/>
    </controller>
    <controller type="pci" index="6" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="6" port="0x15"/>
      <alias name="pci.6"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x02" function="0x5"/>
    </controller>
    <controller type="pci" index="7" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="7" port="0x16"/>
      <alias name="pci.7"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x02" function="0x6"/>
    </controller>
    <controller type="pci" index="8" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="8" port="0x17"/>
      <alias name="pci.8"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x02" function="0x7"/>
    </controller>
    <controller type="pci" index="9" model="pcie-expander-bus">
      <model name="pxb-pcie"/>
      <target busNr="80">
        <node>0</node>
      </target>
      <alias name="pci.9"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x03" function="0x0" multifunction="on"/>
    </controller>
    <controller type="pci" index="10" model="pcie-expander-bus">
      <model name="pxb-pcie"/>
      <target busNr="160">
        <node>1</node>
      </target>
      <alias name="pci.10"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x04" function="0x0" multifunction="on"/>
    </controller>
    <controller type="pci" index="11" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="11" port="0x0"/>
      <alias name="pci.11"/>
      <address type="pci" domain="0x0000" bus="0x09" slot="0x00" function="0x0"/>
    </controller>
    <controller type="pci" index="12" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="12" port="0x1"/>
      <alias name="pci.12"/>
      <address type="pci" domain="0x0000" bus="0x09" slot="0x01" function="0x0"/>
    </controller>
    <controller type="pci" index="13" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="13" port="0x2"/>
      <alias name="pci.13"/>
      <address type="pci" domain="0x0000" bus="0x09" slot="0x02" function="0x0"/>
    </controller>
    <controller type="pci" index="14" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="14" port="0x3"/>
      <alias name="pci.14"/>
      <address type="pci" domain="0x0000" bus="0x09" slot="0x03" function="0x0"/>
    </controller>
    <controller type="pci" index="15" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="15" port="0x4"/>
      <alias name="pci.15"/>
      <address type="pci" domain="0x0000" bus="0x09" slot="0x04" function="0x0"/>
    </controller>
    <controller type="pci" index="16" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="16" port="0x5"/>
      <alias name="pci.16"/>
      <address type="pci" domain="0x0000" bus="0x09" slot="0x05" function="0x0"/>
    </controller>
    <controller type="pci" index="17" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="17" port="0x6"/>
      <alias name="pci.17"/>
      <address type="pci" domain="0x0000" bus="0x09" slot="0x06" function="0x0"/>
    </controller>
    <controller type="pci" index="18" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="18" port="0x7"/>
      <alias name="pci.18"/>
      <address type="pci" domain="0x0000" bus="0x09" slot="0x07" function="0x0"/>
    </controller>
    <controller type="pci" index="19" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="19" port="0x8"/>
      <alias name="pci.19"/>
      <address type="pci" domain="0x0000" bus="0x0a" slot="0x00" function="0x0"/>
    </controller>
    <controller type="pci" index="20" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="20" port="0x9"/>
      <alias name="pci.20"/>
      <address type="pci" domain="0x0000" bus="0x0a" slot="0x01" function="0x0"/>
    </controller>
    <controller type="pci" index="21" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="21" port="0xa"/>
      <alias name="pci.21"/>
      <address type="pci" domain="0x0000" bus="0x0a" slot="0x02" function="0x0"/>
    </controller>
    <controller type="pci" index="22" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="22" port="0xb"/>
      <alias name="pci.22"/>
      <address type="pci" domain="0x0000" bus="0x0a" slot="0x03" function="0x0"/>
    </controller>
    <controller type="pci" index="23" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="23" port="0xc"/>
      <alias name="pci.23"/>
      <address type="pci" domain="0x0000" bus="0x0a" slot="0x04" function="0x0"/>
    </controller>
    <controller type="pci" index="24" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="24" port="0xd"/>
      <alias name="pci.24"/>
      <address type="pci" domain="0x0000" bus="0x0a" slot="0x05" function="0x0"/>
    </controller>
    <controller type="pci" index="25" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="25" port="0xe"/>
      <alias name="pci.25"/>
      <address type="pci" domain="0x0000" bus="0x0a" slot="0x06" function="0x0"/>
    </controller>
    <controller type="pci" index="26" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="26" port="0xf"/>
      <alias name="pci.26"/>
      <address type="pci" domain="0x0000" bus="0x0a" slot="0x07" function="0x0"/>
    </controller>
    <controller type="virtio-serial" index="0">
      <alias name="virtio-serial0"/>
      <address type="pci" domain="0x0000" bus="0x03" slot="0x00" function="0x0"/>
    </controller>
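The hostdev address added to the devices section uses hexadecimal values, while the domain, bus, slot, and function elements printed by virsh nodedev-dumpxml are decimal (bus 59 is 0x3b). The following sketch derives the hostdev element from the dumped XML using only the Python standard library. It assumes the XML has the shape shown in the earlier example; the function name is hypothetical.

```python
import xml.etree.ElementTree as ET


def hostdev_from_nodedev(nodedev_xml):
    """Build a <hostdev> element from the output of virsh nodedev-dumpxml."""
    capability = ET.fromstring(nodedev_xml).find("capability[@type='pci']")
    if capability is None:
        raise ValueError("no PCI capability found in the node device XML")
    # These direct child elements hold decimal values, e.g. <bus>59</bus>.
    domain = int(capability.findtext("domain"))
    bus = int(capability.findtext("bus"))
    slot = int(capability.findtext("slot"))
    function = int(capability.findtext("function"))
    address = "<address domain='0x%04x' bus='0x%02x' slot='0x%02x' function='0x%x'/>" % (
        domain, bus, slot, function)
    return "\n".join([
        "<hostdev mode='subsystem' type='pci' managed='yes'>",
        "  <source>",
        "    " + address,
        "  </source>",
        "</hostdev>",
    ])


if __name__ == "__main__":
    sample = (
        "<device><name>pci_0000_3b_00_0</name><capability type='pci'>"
        "<domain>0</domain><bus>59</bus><slot>0</slot><function>0</function>"
        "</capability></device>"
    )
    print(hostdev_from_nodedev(sample))
```

The generated address can be compared with the address element inside iommuGroup in the dumped XML, which already carries the hexadecimal form.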


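- Run the following command to start the target VM.

For the Atlas 800 training server (model 9000), if the VM must be started again shortly after it is shut down, wait at least 20 seconds before starting it, and make sure that npu-smi info on the physical machine can find the NPU device to be passed through.

virsh start <domain>

    virsh start openeuler
    Domain openeuler started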

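- Log in to the target VM.

On the physical machine, run ssh root@xxx to log in to the target VM, where xxx is the IP address of the target VM (for example, 192.168.1.199).

- Run the following command to view the PCIe information of the passed-through NPU chip.

lspci | grep <device_name>

    lspci | grep d500
    3b:00.0 Processing accelerators: Huawei Technologies Co., Ltd. Device d500 (rev 23)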

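Table 1 Parameter description

| Parameter | Description |
| --- | --- |
| device_name | PCIe name of the NPU chip. The value depends on the product; see the list below the table. |
| domain | Name of the target VM. It can be queried by running virsh list --all. |
| device | Node device name, for example, pci_0000_3b_00_0. (virsh also accepts a 'wwnn,wwpn' pair for this argument, but only for HBA devices.) |

Values of <device_name>:

- Atlas 300I Pro inference card/Atlas 300V Pro video analysis card/Atlas 300V video analysis card/Atlas 300I Duo inference card: d500

- Atlas 800 training server (model 9000): d801

- Atlas 200T A2 Box16 heterogeneous subrack/Atlas 800T A2 training server/Atlas 800I A2 inference server/Atlas 900 A2 PoD cluster basic unit: d802

- Atlas 200I A2 accelerator module: d105

- Atlas 900 A3 SuperPoD/A200T A3 Box8 SuperPoD server: d803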
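The device_name values above lend themselves to a lookup table, for example in a script that prepares the lspci filter for a given product. In the sketch below the values come from the parameter description, while the short product labels used as keys and the function name are hypothetical.

```python
# device_name values from the parameter description.
# The short product labels are hypothetical keys chosen for this sketch.
DEVICE_NAMES = {
    "Atlas 300I Pro": "d500",
    "Atlas 300V Pro": "d500",
    "Atlas 300V": "d500",
    "Atlas 300I Duo": "d500",
    "Atlas 800 (model 9000)": "d801",
    "Atlas 200T A2 Box16": "d802",
    "Atlas 800T A2": "d802",
    "Atlas 800I A2": "d802",
    "Atlas 900 A2 PoD": "d802",
    "Atlas 200I A2": "d105",
    "Atlas 900 A3 SuperPoD": "d803",
    "A200T A3 Box8": "d803",
}


def lspci_filter(product):
    """Return the lspci command line that lists the NPU chips of a product."""
    return "lspci | grep %s" % DEVICE_NAMES[product]


if __name__ == "__main__":
    print(lspci_filter("Atlas 300I Pro"))
```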